As you can probably guess I’m running a virtualized pfSense on XCP-NG.

I have an LACP bond from my switch to XCP-NG: four 1Gb connections bonded together.

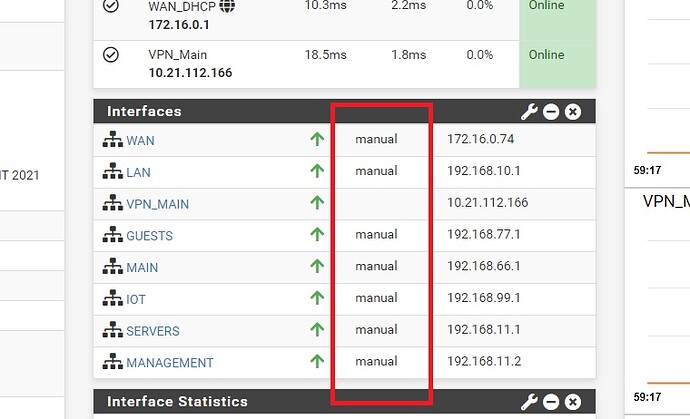

When I pass this to pfSense, it does not see the aggregated speed (in fact it sees no speed and says “manual”) so I’m not sure how it treats it.

Anyway, I don’t think I’m getting the benefit of the bonded connection, and I was thinking maybe I should use SR-IOV to pass the ports to pfSense and then do the bonding within pfSense so it is fully aware.

With LACP I need to configure it on the switch too and I then have a single network on XCP-NG.

With SR-IOV, I would have 4 SR-IOV-enabled networks passed to pfSense, which would then bond them (I think?!).

What then becomes unclear to me is how other VMs interact with these SR-IOV connections. What if I want a link aggregation going to one VM and a VLAN to another?

It feels like I could get into a mess.

In short, what’s the best way to approach bonded connections in this scenario?

If the goal is more speed, then bonding is not as good a solution as buying 10G cards. Also, I am not sure how well pfSense will work with virtualized bonded interfaces; it’s not something we have done or consulted on. I mostly push people to hardware-based pfSense solutions for production systems because they are much more stable.

Thanks Tom.

Yes, the goal is more speed, but obviously within the constraints of LACP.

It’s a home setup, I’m running router on a stick. So I was primarily trying to address that shortcoming of a single 1Gb link.

Adding extra links into the bond would give me a bit more headroom when various things are going on, usually an SMB transfer, backup or similar across a VLAN, without interrupting the CCTV, streaming, etc. (I’d rather have more bandwidth than QoS limits).

I’ve since read that if you pass ports through with SR-IOV and run LACP within the VM, those connections cannot be used elsewhere, which makes sense now I think about it. So that leaves me with my current LACP to XCP-NG and trying to get that working better.

I was looking at moving to 10Gb but then finances got in the way. When I have the money I hope to put a USW-Aggregation-EU (8 port SFP+ aggregation switch) at the top of my rack, plug my key devices into that, and then have 2 x SFP uplinks to my 24 port switch.

In the meantime I was trying to squeeze more out of my current setup.

Given it’s a 4 port card I will experiment with passing 2 SR-IOV and leaving the other 2 to XCP-NG for VMs because right now, whatever is happening with LACP, it is causing issues for pfSense where I’m losing performance not gaining.

With LACP you get more aggregate bandwidth and redundancy, and VLANs will just work on top of that. If one of the connections fails, you still have the other three up. Not sure you can get much more than that.
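To put some shape on what "more bandwidth" means here: an LACP bond spreads traffic per flow, not per packet, so a single transfer stays on one 1Gb member link and only multiple concurrent flows can fill the bond. Below is a rough Python sketch of that idea. The hash is a toy stand-in (CRC32 over addresses and ports), not the actual policy your switch or the XCP-NG bond uses, and the function names, IPs and rates are made up for illustration.

```python
import zlib


def pick_link(src_ip, dst_ip, src_port, dst_port, n_links=4):
    """Toy layer3+4-style hash (an assumption, not a real vendor algorithm).

    The property that matters: the same flow always lands on the same link.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links


def per_link_load(flows, n_links=4):
    """Sum the offered load (Mb/s) that each member link would carry."""
    load = [0.0] * n_links
    for src_ip, dst_ip, src_port, dst_port, mbps in flows:
        load[pick_link(src_ip, dst_ip, src_port, dst_port, n_links)] += mbps
    return load


if __name__ == "__main__":
    # Hypothetical traffic mix: one big SMB copy plus a few smaller flows.
    flows = [
        ("10.0.10.5", "10.0.20.9", 50000, 445, 900.0),   # SMB transfer
        ("10.0.30.7", "10.0.20.9", 50001, 554, 40.0),    # CCTV stream
        ("10.0.10.6", "10.0.40.2", 50002, 443, 25.0),    # streaming
    ]
    for link, mbps in enumerate(per_link_load(flows)):
        print(f"link {link}: {mbps:.0f} Mb/s")
```

However the flows happen to hash, the 900 Mb/s copy sits entirely on one link, so a single large transfer never goes faster than one 1Gb leg. The bond only helps when several flows land on different links.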

If you are trying to squeeze more performance out of what you have you might need to look at traffic shaping.

I get that, but I’m “losing” performance somewhere and I suspect it is how pfSense sees the XCP-NG bonded network. I’ve disabled all the usual offloading and the LACP works, but I’m not getting any benefit. Given it is a router on a stick, I would at the very least expect it to give me more bi-directional throughput than a single 1Gb link.

I don’t think you have to do too much to set up LACP on physical devices; it kinda works or it doesn’t.

That leaves your virtual machine; maybe the Ethernet drivers or VM tools are the source of the issue.

I’ve got Proxmox with a quad port card on an LACP connection to my switch. It’s a bit weird how that needed to be set up, though it seems to be working without any issues (it’s not running pfSense).

I configured it on my switch and on XCP-NG.

I think the problem comes with pfSense and how it sees that link interface.

When you say drivers, do you mean on the pfSense side? That would seem logical.

I tried SR-IOV to see if I could set up the LACP on pfSense, but that failed, and on closer inspection, although my pfSense box supports virtualization, it does not support SR-IOV, so that’s a non-starter.

Given it’s a quad card, what I have done for now is put each VLAN on a different 1Gb leg, which gets me over the router on a stick problem until I can invest in 10Gb. It doesn’t quite share out the load but it’s better than it was.
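For anyone wondering why splitting VLANs across legs helps, here is a back-of-the-envelope Python sketch. It assumes full-duplex 1Gb links, counts only traffic routed between VLANs (same-VLAN traffic stays on the switch), and uses made-up VLAN IDs and rates. On a single trunk every routed flow comes in and goes back out on the same link, while with one leg per VLAN it comes in on the source leg and leaves on the destination leg.

```python
def trunk_utilisation(flows, link_gbps=1.0):
    """Router on a stick: every routed flow is received and then
    transmitted on the same single trunk link."""
    total = sum(rate for _src, _dst, rate in flows)
    return {"rx": total / link_gbps, "tx": total / link_gbps}


def per_vlan_leg_utilisation(flows, link_gbps=1.0):
    """One physical leg per VLAN: a flow is received on the source
    VLAN's leg and transmitted on the destination VLAN's leg."""
    legs = {}
    for src, dst, rate in flows:
        legs.setdefault(src, {"rx": 0.0, "tx": 0.0})["rx"] += rate / link_gbps
        legs.setdefault(dst, {"rx": 0.0, "tx": 0.0})["tx"] += rate / link_gbps
    return legs


if __name__ == "__main__":
    # Hypothetical load: (source VLAN, destination VLAN, Gb/s).
    flows = [
        (10, 20, 0.6),  # backup from VLAN 10 to VLAN 20
        (20, 10, 0.6),  # SMB copy from VLAN 20 to VLAN 10
    ]
    print("single trunk:", trunk_utilisation(flows))
    print("per-VLAN legs:", per_vlan_leg_utilisation(flows))
```

With those example numbers the single trunk is asked for 1.2x its capacity in each direction, while each per-VLAN leg sits at 0.6x. It is only a capacity estimate, not a measurement, but it shows why the per-VLAN layout relieves the trunk even without LACP.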

When I mentioned drivers, I was thinking that if you have a Realtek card, pfSense / FreeBSD seems to struggle with them, though with a quad card I would guess you have an Intel.

LACP works without issues on pfSense, so I would guess the source of your issue is either your network card or your VM settings. Though I guess you’ve accepted the current situation. The last test you could perform is to install pfSense on bare metal and inspect the results.

Yes, I probably should have mentioned it’s an Intel I350.

I use the inbuilt Realtek for management only, in case I bork anything on the main interfaces.

> LACP works without issues on pfSense

But I think that is the issue: the LACP is on XCP-NG and then I pass a VIF through to pfSense.

Whatever it is seeing is not allowing it to get close to even what I would expect.

I suspect if I pass through the hardware (which is why I was exploring SR-IOV) to pfSense and it controls the LACP it will work as expected.

Given SR-IOV is not an option, the only thing I could do is pass the whole Ethernet card through to pfSense. I suppose I could do that, then establish a virtual network and connect my VMs to it, but then I can’t pass anything to the outside world without going through pfSense.

I think I’ll just have to stick with what I have until I go 10Gb.

I suspect this is the issue. I can’t figure out why it did this. pfSense driver? It does the same on a single 1Gb link now too.

Over the weekend I swapped out the SSD and did a bare metal install as a test. I now have a bare metal adapter visible to pfSense and I get the sort of performance I would expect, so the issue is almost certainly how LACP is set up/negotiated between XCP-NG, the VIF and pfSense.

This does work nicely now. I just miss being able to use snapshots as a really easy way to back up my pfSense and migrate it between servers when working on one or the other.

I certainly see why Tom recommends 10Gb in the virtualized world. A lot fewer variables to work with when trying to identify and resolve issues and you only have one interface to worry about.