I am looking for some help setting up an internal network between hosts. The main reason is Continuous Replication: I would like it to run internally, because it currently goes out via the WAN and back again.

My setup is as follows:

Two identical servers next to each other in the rack

Server 1 is within its own pool

Server 2 is within its own pool

eth0 is connected to the DC

eth2 is connected to eth2 of the other server

eth3 is connected to eth3 of the other server

I have created a bonded connection from eth2 and eth3

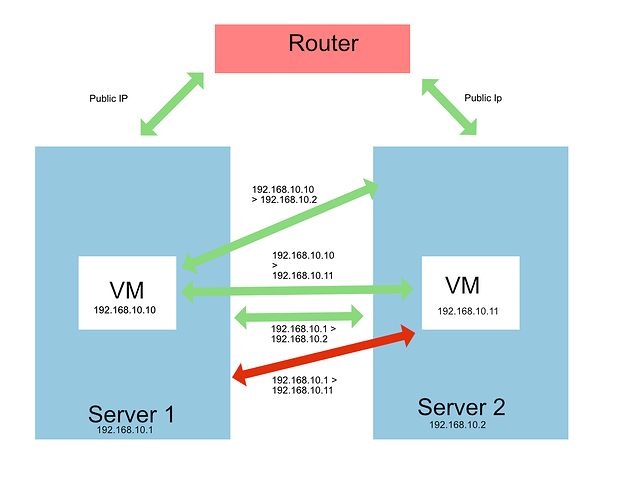

Server 1 has a private IP of 192.168.10.1 (bonded connection)

Server 2 has a private IP of 192.168.10.2 (bonded connection)
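As a quick sanity check on the addressing, here is a minimal sketch using Python's standard `ipaddress` module. It only confirms that the two bonded addresses are private and sit in the same subnet. The `/24` prefix length is an assumption, since the netmask configured on the bond is not stated above.

```python
import ipaddress

# Addresses from the setup above; the /24 prefix length is an assumption.
BOND_PREFIX = 24
server1 = ipaddress.ip_interface(f"192.168.10.1/{BOND_PREFIX}")
server2 = ipaddress.ip_interface(f"192.168.10.2/{BOND_PREFIX}")


def same_subnet(a, b):
    """Return True when both interface addresses belong to the same network."""
    return a.network == b.network


if __name__ == "__main__":
    print("same subnet:", same_subnet(server1, server2))
    print("both private:", server1.ip.is_private and server2.ip.is_private)
```

If the real netmask differs between the two hosts, the same check with the actual prefix lengths would show whether they still agree on the network.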

Both hosts have the migration network set to use the bonded connection

When I migrate from Server 1 > Server 2, it goes via the bonded connection. The same applies the other way around.

When I run a Continuous Replication from Server 1 > Server 2, it uses the bonded connection. When I run it the other way around, it never completes. Hours later, the progress is still at 0%.

Server 1 can ping Server 2, and vice versa.

VMs can’t ping VMs on the other server, but the XO installs can ping each other from server to server.

When the bonded connection is disabled, Continuous Replication works both ways via eth0, but it uses bandwidth because the traffic leaves the DC via the public IP and comes back again.

What is the best way to set up the backup to work internally? The DC has told me I can create a private network on eth0 using 192.168.x.x or 10.x.x.x, which won’t count towards my bandwidth limit. I added the bonded connection thinking it would work, but it doesn’t. They also said I can connect both servers via my own switch, but I would rather not.

If adding both servers to the same pool helps, I am happy to do this. I am open to any suggestions and help.
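If I go the eth0 private network route, the range would presumably need to be different from the one already on the bond, otherwise the hosts would have two interfaces in the same subnet. A minimal sketch of that check with Python's `ipaddress` module follows. The bond network `192.168.10.0/24` assumes a `/24` mask, and the candidate ranges are hypothetical examples, not values given by the DC.

```python
import ipaddress

# Bond subnet from the setup above; the /24 mask is an assumption.
bond_net = ipaddress.ip_network("192.168.10.0/24")

# Hypothetical candidate ranges for a private network on eth0.
candidates = ["192.168.10.0/24", "192.168.20.0/24", "10.0.0.0/24"]


def usable_for_eth0(candidate, existing):
    """True if the candidate is a private range that does not overlap the existing one."""
    net = ipaddress.ip_network(candidate)
    return net.is_private and not net.overlaps(existing)


if __name__ == "__main__":
    for cidr in candidates:
        print(cidr, "usable:", usable_for_eth0(cidr, bond_net))
```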

Thank you