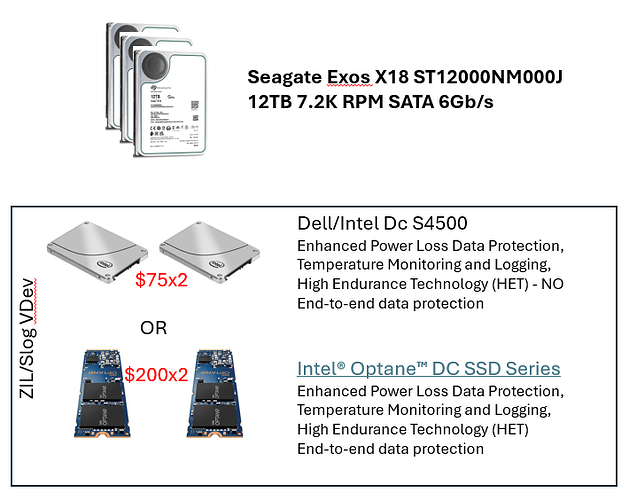

I’m learning more about ZIL/SLOG VDevs and getting hardware optimized.

Seems like a small device (in terms of modern disk sizes) with deep queue depth and power-loss protection is what matters.

In years past, Intel Optane was the leader, but those drives still seem very expensive.

How much am I giving up by going with an enterprise SSD over the Optane? The price difference is significant for me. What else is out there as an option? Both prices below are for used devices.

Haven’t thought much about it yet but something like that Dell SSD might also be good for a read cache and those two could be used for 1x read cache in each of my two NAS units.

I am planning on having a complete hardware copy of this same system at an offsite family member's house and will figure out how to do cross-location backups.

Optane for ZIL only makes a noticeable difference when it's for an SSD pool. For HDD you aren't going to notice because the writes still eventually have to go to the pool.

Hmm, I would have thought that ZIL would have been more important with spinning disks due to the time it takes to move the head around. I do have a lot to learn.

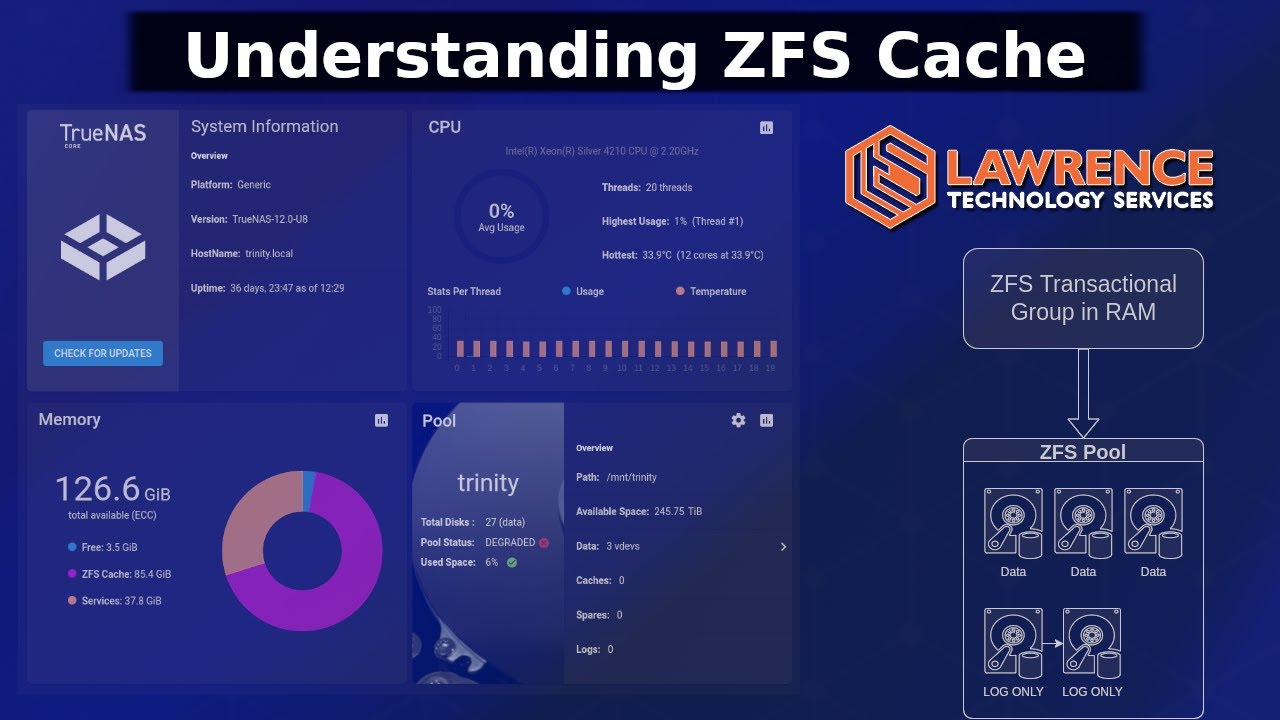

ZIL is not always needed as it depends on your use case and workload.

ZFS caches writes in memory and writes them out to disk every 5 seconds by default. This allows it to turn a certain portion of random writes into sequential ones and do some other optimizations. However, this means data not yet written is vulnerable. Operating systems offer a type of write called SYNC, or synchronous, that applications can use when they want to make sure data is written permanently before they continue, for example before telling a user that the file is saved.

For this purpose all ZFS pools have a ZIL, or ZFS Intent Log, which is used for both SYNC writes and metadata about the general pool updates. When the current 5-second period ends, that data is written into the pool properly along with any ASYNC writes that happened. If a dedicated ZIL device is not provided, the ZIL will live in a small area of each pool member (32MB if I remember correctly).

This means that without a dedicated ZIL device, mechanical drives have to rapidly seek to the ZIL area of the disk whenever a SYNC write or metadata update happens. Therefore, unless the workload is only doing ASYNC operations, HDD pools benefit greatly from having a dedicated ZIL to take the sync writes temporarily. But since it is just an HDD pool, it will still have a fairly low write speed limit even for sequential data, so going beyond a SATA SSD for the ZIL is not really noticeable performance-wise.
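To make the SYNC vs ASYNC distinction concrete, here is a minimal Python sketch that writes the same data twice: once buffered, and once forcing every write to stable storage with `os.fsync`. On a ZFS dataset the second path is the kind of write the ZIL has to absorb. The function name, block size, and write count are made up for illustration, and the timings depend entirely on the filesystem and hardware underneath.

```python
import os
import tempfile
import time


def timed_writes(path, payload, count, sync):
    """Write `payload` `count` times; when `sync` is True, fsync after every write."""
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(payload)
            if sync:
                f.flush()             # push Python's userspace buffer to the OS
                os.fsync(f.fileno())  # block until the OS reports the data as durable
    return time.perf_counter() - start


if __name__ == "__main__":
    payload = b"x" * 4096  # hypothetical 4 KiB block, chosen only for illustration
    with tempfile.TemporaryDirectory() as d:
        buffered = timed_writes(os.path.join(d, "async.bin"), payload, 200, sync=False)
        synced = timed_writes(os.path.join(d, "sync.bin"), payload, 200, sync=True)
    print(f"buffered: {buffered:.4f}s   fsync per write: {synced:.4f}s")
```

Running this from a directory on the pool, with and without a log device attached, is a rough way to see whether the workload would notice the difference at all.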

The Dells could fail two or three times and be replaced for the price of the Optane so as long as the mirrored devices don’t fail at the same time then the cost savings are moot. It just seems crazy to be using 960GB disks for a ZIL when 1/10th would be more than enough.
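For a rough sense of how small a log device can be, here is a back-of-the-envelope sketch. It assumes the log only needs to hold what can arrive over the network during a couple of the 5-second flush periods mentioned above; the factor of 2 is a rule-of-thumb assumption, not a ZFS constant, and the function name is made up for illustration.

```python
def slog_size_gb(ingest_gbit_per_s, txg_seconds=5.0, txgs_in_flight=2):
    """Estimate the log capacity (in GB) needed to absorb sync writes.

    ingest_gbit_per_s: fastest rate data can reach the NAS, in Gbit/s.
    txg_seconds:       flush interval (5 s is the default quoted in the thread).
    txgs_in_flight:    ASSUMPTION: how many flush periods of data may be
                       pending at once; 2 is a guess used for headroom.
    """
    bytes_per_second = ingest_gbit_per_s * 1e9 / 8
    return bytes_per_second * txg_seconds * txgs_in_flight / 1e9


if __name__ == "__main__":
    for link in (1, 2.5, 10):
        print(f"{link} Gbit/s link -> about {slog_size_gb(link):.2f} GB of log space")
```

Under these assumptions even a saturated 10 Gbit/s link works out to about 12.5 GB, so capacity is not the reason to pick one drive over another here.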

I recall a video Tom did showing the importance of queue depth for real world operational performance but I can never find that metric advertised for drives.

I’m still working on the overall use cases for my NAS, as historically I have used mirrored consumer-grade SSDs in a variety of Proxmox computers for the VM root partitions and mounted large data items from the NAS. If I continue using my NAS for content storage (and not content editing) then I am hearing the community tell me that maybe I am letting my nerd spec focus overwhelm my practicality and I should just skip the vdev. I hear you. If I use containers on my NAS (i.e. moving Immich and MediaCMS to the NAS) that might change the calculus. Maybe it would be better for me to take the Dells and make them data disks for VMs rather than ZIL in that case.

On the multi-site backups, I bought two of the new Minisforum N5 NAS devices (one Pro for my homelab and one Std for my remote site at a family member's house). I haven’t designed out the details, but the idea is that we each have our locally hosted ‘data’ storage and we back up our data each night to the other person’s device. Early thinking is to just use Tailscale to access the remote device instead of setting up a more traditional VPN. I am assuming there are standard methods to do TrueNAS SCALE backups.

Thank you all for your help. I just want to avoid analysis paralysis and get going. Currently I have my disks hanging in an old tower case in a total hack way.

Don’t use consumer drives and expect performance.

I rarely see the max queue depth listed in the specs for SSDs. Even on the Intel site. Is it under a different name sometimes?

Max queue depth is mostly on the protocol specification side, i.e. AHCI vs NVMe (and what version of NVMe). Drives could support less than their protocol version but I believe that’s uncommon, especially if something is marketed for enterprise use. Queue depths beyond 32 aren’t going to be relevant for very many scenarios, and every NVMe drive should support that. For ZIL use you’re unlikely to see beyond maybe 4 in your queue if this is for home / homelab, unless you set something to SYNC writes only.
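One way to see why low queue depths shift the focus from queue support to latency is Little's law: sustained operations per second is roughly the number of requests in flight divided by the time each one takes. The sketch below applies that under the simplifying assumption that per-request latency stays constant as the queue fills, which real drives do not guarantee. The two latency figures are hypothetical placeholders, not measured specs for any drive in this thread.

```python
def estimated_iops(queue_depth, latency_seconds):
    """Little's law: throughput = requests in flight / time per request."""
    return queue_depth / latency_seconds


if __name__ == "__main__":
    # HYPOTHETICAL latencies, chosen only to illustrate the relationship.
    drives = {"low-latency drive": 10e-6, "higher-latency drive": 100e-6}
    for depth in (1, 4, 32):
        for name, latency in drives.items():
            iops = estimated_iops(depth, latency)
            print(f"QD {depth:>2}  {name:<22} ~{iops:,.0f} IOPS")
```

At a queue depth of 1 to 4, which is the range suggested above for a home ZIL, the only lever in this model is latency; deep queue support only starts to matter once the workload actually keeps that many requests outstanding.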

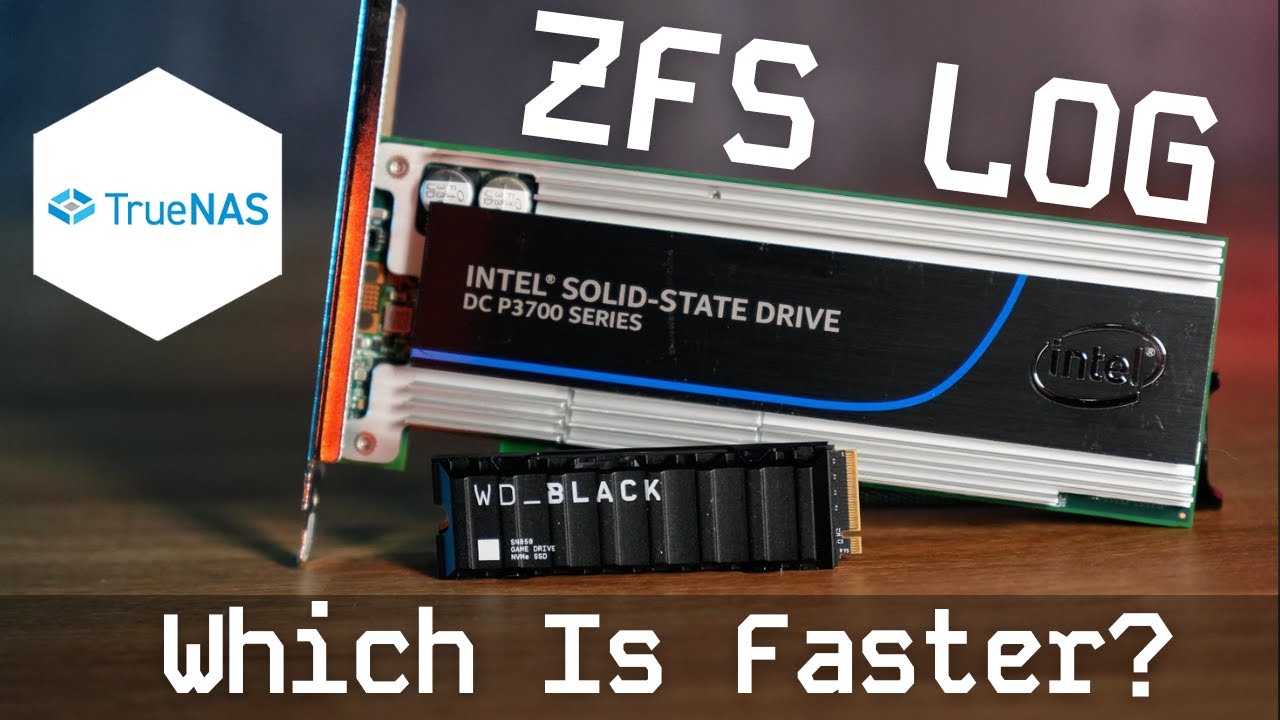

How does that line up with the performance comparison video Tom did? He compared a consumer NVMe to the PCIe Optane and the consumer NVMe got smoked due to what I thought was the queue depth. Was the issue queue depth or protocol (PCIe vs NVMe)?

That was the worst case scenario with the pool set to Sync Always and with 32 jobs in the benchmark. You’re not going to hit a pool like that without hundreds of clients or a very sadistic workload (maybe a poorly optimized database with heavy write usage).

Both those drives are NVMe - NVMe is a protocol, not a connector type. I was wrong about the number of queues being related to NVMe version, but I would still say that for a low-usage environment the number of queues is not a significant decision factor. There are lots of other factors, like PLP, that are going to end up pushing you towards a drive with higher queue depth anyway.