My UniFi US-16-XG only gets 4-5 Gbit/s. I tested with different NICs and servers.

Any idea what might be the cause?

In your testing, have you tried different hard drives and/or storage controllers? Without much else to go on, my initial thought is that you might have a storage bottleneck.

You might need to elaborate on what hardware is in the different servers.

I’ve had issues with the optimization of some NICs. Specifically, I was getting that kind of performance with a Chelsio T420 and ESXi. The T520 works great though.

I would try a direct connection between two of the boxes to figure out if it’s a NIC or switch issue.

What are you using for your speed testing? File transfers are limited by your disk speed. I believe iperf uses RAM, or at least isn’t limited by disk speed.
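To compare a file copy with an iperf result, it helps to put disk throughput and link speed in the same units: a copy can never be faster than the slower of the two. A minimal Python sketch follows; the disk speeds in it are hypothetical placeholders, not measurements from anyone's setup in this thread.

```python
def mbytes_to_gbits(mbytes_per_sec: float) -> float:
    """Convert a throughput in megabytes/s (10**6 bytes) to gigabits/s."""
    return mbytes_per_sec * 8 / 1000


def transfer_ceiling_gbits(disk_mbytes_per_sec: float, link_gbits: float = 10.0) -> float:
    """A file copy can go no faster than the slower of the disk and the link."""
    return min(mbytes_to_gbits(disk_mbytes_per_sec), link_gbits)


if __name__ == "__main__":
    # Hypothetical disk speeds in MB/s; substitute your own measurements.
    for disk in (250, 550, 1250, 3000):
        ceiling = transfer_ceiling_gbits(disk)
        print(f"{disk} MB/s disk -> at most {ceiling:.1f} Gbit/s over a 10G link")
```

iperf sidesteps this ceiling because it sends data from memory, which is why it is the better tool for isolating the network.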

If you are consistently getting 4.5G, it may be that your motherboard doesn’t have a PCIe slot capable of 10G, or that the card you are using has a driver issue.
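As a rough check of the PCIe theory, the usable bandwidth of a slot follows from its generation and negotiated lane count. The sketch below assumes the standard per-lane rates (2.5, 5 and 8 GT/s for PCIe 1.x, 2.0 and 3.0) and their line encodings, and it ignores packet overhead, so real throughput will be somewhat lower. On Linux, the `LnkSta` line in `sudo lspci -vv` shows the speed and width a card actually negotiated, which can be lower than what the slot or card is rated for.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # PCIe 1.x: 8b/10b encoding
    2: (5.0, 8 / 10),     # PCIe 2.0: 8b/10b encoding
    3: (8.0, 128 / 130),  # PCIe 3.0: 128b/130b encoding
}


def pcie_link_gbits(generation: int, lanes: int) -> float:
    """Bandwidth per direction after line encoding, before packet (TLP) overhead."""
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt * efficiency * lanes


if __name__ == "__main__":
    for gen, lanes in [(2, 1), (2, 2), (2, 4), (3, 1), (3, 2)]:
        bw = pcie_link_gbits(gen, lanes)
        # Packet overhead is ignored, so "enough" here is a best case.
        verdict = "enough" if bw > 10 else "NOT enough"
        print(f"PCIe {gen}.0 x{lanes}: {bw:.2f} Gbit/s -> {verdict} for one 10G port")
```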

I tried this between my R720 server with an X520 (the VMs are installed on SSD), my PC with an ASUS XG-C100C, and a Synology with a 10G card installed. All results are 4-5 Gbit/s.

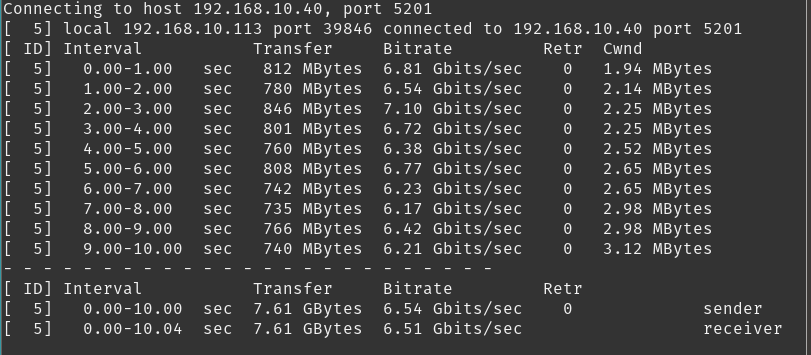

See the screenshot below. It is between two VMs on my XCP-ng host (R720).

Attached is the screenshot of the speed test between a VM on my R720 and the Synology NAS with the 10G NIC.

I have no idea what could be keeping the speed below ~9 Gbit/s.

How is your system doing while you run the tests? Is it CPU- or RAM-bottlenecked? (Unlikely, but worth checking just in case.)

It sounds like you have some driver issues to sort out. I’d start by looking for posts of similar issues with the different NIC/OS combinations.

Can the Synology itself push more than 5 Gb/s? What model, what drives, and what array type?

DS1618+ with 4 HDDs and 2 SSDs acting as a cache.

I have a 10G NIC installed in the Synology.

SHR RAID.

That should be fine, and iperf in particular doesn’t even use the drives, so getting a low result there really does indicate a non-storage issue.

Can you try iperf from the XCP-ng host itself on the R720, rather than from a VM?

In the VMs, are you using a paravirtualized network card? If the network card shows up as “Intel E1000” or some other specific model of legacy network card, then the VMs are using an emulated network card. I also ask because a test between two VMs on the R720 is independent of the physical networking hardware, and 6.5 Gb/s seems slow for that.
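Inside a Linux guest, one way to see which kind of NIC a VM got is to look at the driver bound to the interface in sysfs. A hedged Python sketch: the driver names it treats as emulated (`e1000`, `8139cp`, `8139too`) or paravirtualized (`vif`, the Xen netfront driver) are assumptions about a typical Xen HVM guest, so check what your own guest reports (`ethtool -i <iface>` shows the same driver name).

```python
import os

# Assumed driver names: QEMU-emulated NICs under Xen HVM commonly appear as
# e1000 or 8139cp/8139too, while the Xen paravirtual front-end appears as "vif".
EMULATED_DRIVERS = {"e1000", "8139cp", "8139too"}
PARAVIRTUAL_DRIVERS = {"vif"}
SYS_NET = "/sys/class/net"


def nic_driver(iface: str, sys_net: str = SYS_NET) -> str:
    """Return the kernel driver bound to a network interface (Linux sysfs)."""
    link = os.path.join(sys_net, iface, "device", "driver")
    return os.path.basename(os.path.realpath(link))


def classify(driver: str) -> str:
    """Label a driver name using the assumed sets above."""
    if driver in PARAVIRTUAL_DRIVERS:
        return "paravirtualized"
    if driver in EMULATED_DRIVERS:
        return "emulated"
    return "unknown"


if __name__ == "__main__" and os.path.isdir(SYS_NET):
    for iface in sorted(os.listdir(SYS_NET)):
        # Virtual interfaces such as lo have no backing device; skip them.
        if os.path.exists(os.path.join(SYS_NET, iface, "device", "driver")):
            drv = nic_driver(iface)
            print(f"{iface}: {drv} ({classify(drv)})")
```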

It’s HVM. Let me install the Citrix tools and I will try again.

Now I have PVHVM, with the same results.

@darokis, I’m unfamiliar with performance under Xen, but I could believe that whatever network bridge is running on your R720 is slowing things down; 6.5 Gbps between VMs seems slow, as was mentioned above. You could set up SR-IOV on the Xen server (I think your hardware should support it), but that’s a pain to get working.

Do you have a spare machine with a 10GbE card (either 10GBASE-T or SFP+) to test a point-to-point connection? This would rule out any local misconfiguration or computer-based bottlenecks.

You could also increase throughput by bumping the MTU to 9000, but all the hardware and OSes on that network need to be set for jumbo frames. It’s cheating a bit.

I tested it between my Synology DS1618+ with the 10G NIC and my PC, with the same results.

@darokis, did you use the switch, or was it a direct cable connection between the Synology and the PC?

Switch. I will try with a direct cable.

Could having some NICs set to 9000 MTU and some to 1500 cause this issue?

I’m not sure if all my devices are set up for 9000. I know for sure the ones I’m testing the speed on are set to 9000.
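On a Linux host, the local side of that question can be answered from sysfs. This Python sketch lists interfaces whose MTU differs from an expected value (9000 here, an assumption matching the question). It only covers the machine it runs on; the switch and every other host still need checking. An end-to-end test on Linux is `ping -M do -s 8972 <peer>`, which sends a 9000-byte IP packet with fragmentation prohibited (8972 bytes of ICMP payload plus 28 bytes of IP and ICMP headers).

```python
import os

SYS_NET = "/sys/class/net"


def interface_mtus(sys_net: str = SYS_NET) -> dict[str, int]:
    """Read the MTU of every network interface on this Linux host from sysfs."""
    mtus = {}
    for iface in sorted(os.listdir(sys_net)):
        path = os.path.join(sys_net, iface, "mtu")
        if os.path.isfile(path):
            with open(path) as f:
                mtus[iface] = int(f.read().strip())
    return mtus


def mismatched(mtus: dict[str, int], expected: int = 9000,
               ignore: tuple[str, ...] = ("lo",)) -> dict[str, int]:
    """Interfaces (other than ignored ones) whose MTU differs from `expected`."""
    return {i: m for i, m in mtus.items() if i not in ignore and m != expected}


if __name__ == "__main__" and os.path.isdir(SYS_NET):
    for iface, mtu in mismatched(interface_mtus()).items():
        print(f"{iface}: MTU {mtu} (expected 9000)")
```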

One thing you can check is interrupt moderation. On the X520 card (and on Intel cards in general), disabling interrupt moderation lets the driver use more CPU resources to push more traffic.

I haven’t used Chelsio cards recently, but I’m sure they have a similar setting.

Another thing you can try is to increase the number of Tx/Rx queues.
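On Linux, the number of queues the driver currently exposes can be read from sysfs before changing it (with `ethtool -L <iface> combined <N>` on drivers that support combined channels; `ethtool -l <iface>` shows the supported maximum). This Python sketch only reports the counts next to the CPU count; treating "fewer queues than CPU cores" as a hint that there is room to add more is a rule of thumb, not a guarantee. The default interface name `eth0` is a placeholder.

```python
import os
import re
import sys

QUEUE_NAME = re.compile(r"(rx|tx)-\d+")


def count_queues(entries) -> dict[str, int]:
    """Count rx-N / tx-N names in a listing of /sys/class/net/<iface>/queues."""
    counts = {"rx": 0, "tx": 0}
    for entry in entries:
        match = QUEUE_NAME.fullmatch(entry)
        if match:
            counts[match.group(1)] += 1
    return counts


def queue_counts(iface: str, sys_net: str = "/sys/class/net") -> dict[str, int]:
    """Read the queue directories the kernel exposes for one interface."""
    return count_queues(os.listdir(os.path.join(sys_net, iface, "queues")))


if __name__ == "__main__":
    iface = sys.argv[1] if len(sys.argv) > 1 else "eth0"  # placeholder interface name
    queues_dir = os.path.join("/sys/class/net", iface, "queues")
    if os.path.isdir(queues_dir):
        counts = queue_counts(iface)
        print(f"{iface}: {counts['rx']} rx / {counts['tx']} tx queues, "
              f"{os.cpu_count()} CPUs")
    else:
        print(f"{queues_dir} not found")
```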

Personally, I wouldn’t bother adjusting MTU, I’d leave it set to 1500. In some very specific cases, it can improve performance a little bit, if (and only if) all other clients on the network and the switch support jumbo frames, but with how fast modern CPUs are and with all of the hardware offloading functions built into networking adapters, the gains from increasing it aren’t anywhere near as drastic as they used to be 10 years ago. In many cases, it can be detrimental as devices not using the larger packets will cause IP fragmentation and that will hurt performance significantly.
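The size of the jumbo-frame gain can be estimated from per-frame overhead. This Python sketch assumes IPv4, TCP without options, and full-size frames at a 10 Gbit/s line rate; it is a best-case calculation, not a benchmark. Under those assumptions it gives about 9.49 Gbit/s at MTU 1500 and 9.91 Gbit/s at MTU 9000, a gap of roughly 4%, which fits the point above that jumbo frames alone are unlikely to explain a link stuck at half speed.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# preamble + SFD (8) + MAC header (14) + FCS (4) + inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12
IPV4_HEADER = 20
TCP_HEADER = 20  # assumes no TCP options; timestamps would add 12 more bytes


def tcp_goodput_gbits(mtu: int, line_rate_gbits: float = 10.0) -> float:
    """Best-case TCP payload rate for back-to-back full-size frames at a given MTU."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    on_wire = mtu + ETHERNET_OVERHEAD
    return line_rate_gbits * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {tcp_goodput_gbits(mtu):.2f} Gbit/s")
```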

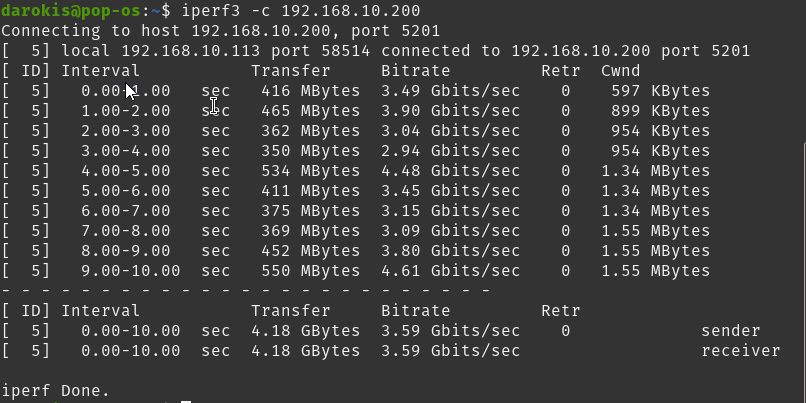

I know this is old, but for anyone else who comes across this problem, I’ll post the solution. With Dell servers it is important to change the System Profile in the BIOS away from DAPC, which limits power use and therefore performance. Change it to OS or Performance. I run my XCP-ng host in Performance mode and get much better speeds; I went from what you were getting to:

```
[09:51 xen-host-01 ~]# iperf3 -c 192.168.120.40
Connecting to host 192.168.120.40, port 5201
[  4] local 192.168.120.201 port 45480 connected to 192.168.120.40 port 5201
[ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
[  4]   0.00-1.00   sec   816 MBytes  6.85 Gbits/sec    0   1.61 MBytes
[  4]   1.00-2.00   sec   991 MBytes  8.32 Gbits/sec    0   2.41 MBytes
[  4]   2.00-3.00   sec  1008 MBytes  8.45 Gbits/sec    0   2.55 MBytes
[  4]   3.00-4.00   sec  1.03 GBytes  8.82 Gbits/sec    0   2.55 MBytes
[  4]   4.00-5.00   sec  1.01 GBytes  8.69 Gbits/sec    0   2.55 MBytes
[  4]   5.00-6.00   sec  1.03 GBytes  8.87 Gbits/sec    0   2.55 MBytes
[  4]   6.00-7.00   sec  1.03 GBytes  8.83 Gbits/sec    0   2.55 MBytes
[  4]   7.00-8.00   sec  1.01 GBytes  8.68 Gbits/sec    0   2.55 MBytes
[  4]   8.00-9.00   sec  1.03 GBytes  8.86 Gbits/sec    0   2.55 MBytes
[  4]   9.00-10.00  sec  1.03 GBytes  8.88 Gbits/sec    0   2.55 MBytes
[ ID] Interval           Transfer     Bandwidth       Retr
[  4]   0.00-10.00  sec  9.92 GBytes  8.52 Gbits/sec    0             sender
[  4]   0.00-10.00  sec  9.92 GBytes  8.52 Gbits/sec                  receiver

iperf Done.
```
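When comparing many runs (for example before and after a BIOS profile change), it can help to pull the summary figures out of iperf3’s text output. A minimal Python sketch, assuming the plain-text layout shown above; iperf3 also has a `-J` option for JSON output, which is the sturdier choice for scripting.

```python
import re

# Matches iperf3 summary lines such as:
# [  4]   0.00-10.00  sec  9.92 GBytes  8.52 Gbits/sec    0             sender
SUMMARY = re.compile(
    r"\[\s*\d+\]\s+[\d.]+-[\d.]+\s+sec\s+[\d.]+\s+\w?Bytes\s+"
    r"(?P<rate>[\d.]+)\s+(?P<unit>[KMG]?)bits/sec.*\b(?P<role>sender|receiver)\s*$"
)
# Multipliers that convert each unit prefix to Gbit/s.
SCALE = {"": 1e-9, "K": 1e-6, "M": 1e-3, "G": 1.0}


def summary_gbits(output: str) -> dict[str, float]:
    """Extract sender/receiver throughput (Gbit/s) from plain-text iperf3 output."""
    result = {}
    for line in output.splitlines():
        match = SUMMARY.search(line)
        if match:
            rate = float(match.group("rate")) * SCALE[match.group("unit")]
            result[match.group("role")] = rate
    return result
```

Per-interval lines are skipped because they do not end in `sender` or `receiver`, so only the two totals are returned.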

I’m unfortunately seeing the same speed degradation. I’m testing between an iMac with a 10G adapter (SonnetTech Thunderbolt 2 adapter), a pfSense XG-1541, and a Synology RS1221+ with dual SFP+ ports via a PCIe card (E10G21-F2).

I’ve experimented with flow control and tried various adjustments but cannot get more than 7 Gbit/s (usually 6 Gbit/s). The SFP+ cables are Ubiquiti DAC cables (all 1 m except the NAS cables, which are 2 m). My iMac is connected via one of the 10G RJ45 ports.

Any thoughts would be appreciated.