Hi all

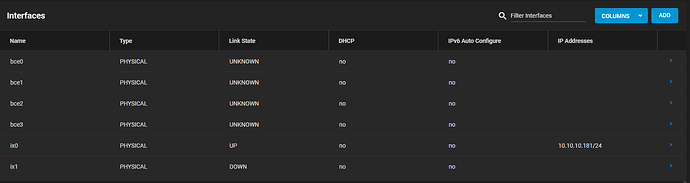

I’m slowly moving the server side of my network over to 10gig, and one of the first machines I tackled was my TrueNAS box.

To give some background, it’s a Dell T710 with a bunch of 12TB SATA drives and 192GB of RAM. To address the elephant in the room: the pools are not set up correctly. I set them up before I knew any better, so the 8 drives are in 2 hardware RAID5 arrays with ZFS on top of that. I know it’s horrible and I have to change it, but I haven’t figured out where to put the 80+ TB of stuff while I rebuild it (first world problems I guess lol).

Anyway, the box was on a 1gig connection to my Cisco switch. On that connection I get sustained 80-90MB/s transfers to my desktop (also on 1gig), which is what I would expect.

When I replaced that connection with the X520-DA2 and an FS.com DAC cable running into the Ubiquiti switch, transfers to the same far-end box (my desktop on a 1gig port) dropped from the expected 80-90MB/s down to 300-700KB/s, or 1MB/s if I’m super lucky. They bounce up and down, occasionally stop, and then continue.

The card is plugged into an x8 slot, but either way I’m not trying to get the full 10gig. Having it drop to under 1MB/s is just like going back to dial-up lol…

I’ve tried a bunch of different tuning settings so far with no improvement.

An iperf3 run from the client side shows this:

F:\iperf-3.1.3-win64>iperf3.exe -c 10.10.10.181 -P 1

Connecting to host nas1, port 5201

[ 4] local 10.10.10.20 port 55629 connected to 10.10.10.181 port 5201

[ ID] Interval Transfer Bandwidth

[ 4] 0.00-1.00 sec 106 MBytes 888 Mbits/sec

[ 4] 1.00-2.00 sec 98.9 MBytes 829 Mbits/sec

[ 4] 2.00-3.00 sec 96.0 MBytes 805 Mbits/sec

[ 4] 3.00-4.00 sec 100 MBytes 840 Mbits/sec

[ 4] 4.00-5.00 sec 104 MBytes 874 Mbits/sec

[ 4] 5.00-6.00 sec 91.8 MBytes 769 Mbits/sec

[ 4] 6.00-7.00 sec 92.9 MBytes 780 Mbits/sec

[ 4] 7.00-8.00 sec 101 MBytes 845 Mbits/sec

[ 4] 8.00-9.00 sec 91.0 MBytes 763 Mbits/sec

[ 4] 9.00-10.00 sec 99.9 MBytes 838 Mbits/sec

[ ID] Interval Transfer Bandwidth

[ 4] 0.00-10.00 sec 981 MBytes 823 Mbits/sec sender

[ 4] 0.00-10.00 sec 981 MBytes 823 Mbits/sec receiver

From the server side:

Server listening on 5201 (test #1)

Accepted connection from 10.10.10.20, port 55628

[ 5] local 10.10.10.181 port 5201 connected to 10.10.10.20 port 55629

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 106 MBytes 886 Mbits/sec

[ 5] 1.00-2.00 sec 98.5 MBytes 826 Mbits/sec

[ 5] 2.00-3.00 sec 96.1 MBytes 806 Mbits/sec

[ 5] 3.00-4.00 sec 100 MBytes 840 Mbits/sec

[ 5] 4.00-5.00 sec 104 MBytes 873 Mbits/sec

[ 5] 5.00-6.00 sec 91.7 MBytes 769 Mbits/sec

[ 5] 6.00-7.00 sec 93.1 MBytes 781 Mbits/sec

[ 5] 7.00-8.00 sec 101 MBytes 844 Mbits/sec

[ 5] 8.00-9.00 sec 91.1 MBytes 764 Mbits/sec

[ 5] 9.00-10.00 sec 99.8 MBytes 837 Mbits/sec

[ 5] 10.00-10.00 sec 384 KBytes 953 Mbits/sec
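As a quick sanity check on the iperf3 numbers above, the summary line (981 MBytes in 10 seconds) can be converted by hand. This is only a sketch: the helper name `iperf_rate` is made up for illustration, and it assumes iperf3 counts "MBytes" in units of 2^20 bytes and "Mbits" in units of 10^6 bits, which is what the reported figures appear to match.

```python
# Convert the iperf3 summary line into a bitrate and a byte rate.
# Assumption: iperf3 "MBytes" are 2**20 bytes, "Mbits" are 10**6 bits.
MIB = 1024 * 1024


def iperf_rate(mbytes, seconds):
    """Return (Mbit/s, MiB/s) for `mbytes` MiB transferred in `seconds`."""
    bytes_per_sec = mbytes * MIB / seconds
    return bytes_per_sec * 8 / 1e6, bytes_per_sec / MIB


mbits, mib_s = iperf_rate(981, 10.0)
print(f"{mbits:.0f} Mbit/s, {mib_s:.1f} MiB/s")  # 823 Mbit/s, 98.1 MiB/s
```

So the raw TCP path in this direction (desktop sending to the NAS) carries roughly 98 MiB/s, which is in line with a healthy 1gig link on the desktop side.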

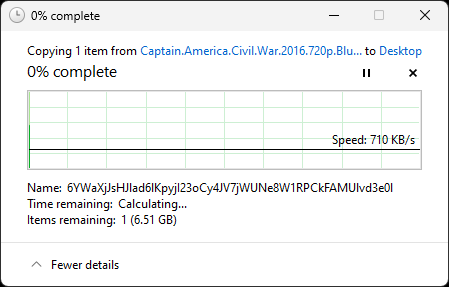

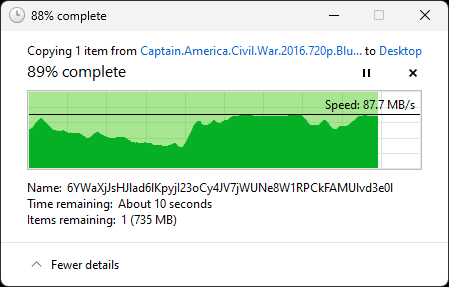

And some Windows screenshots

on 10gig

but on 1gig

I also have a PowerShell script that I use to back up to an SSD, and the same difference shows up there too.

10gig

Speed : 3,191,324 Bytes/sec.

Speed : 182.609 MegaBytes/min.

1gig

Speed : 73,373,607 Bytes/sec.

Speed : 4,198.472 MegaBytes/min.
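To put the two script results side by side in the same units as the iperf3 output, here is a small Python sketch. The function name `summarize` is hypothetical, and the "MegaBytes/min" figure is assumed to be computed with 2^20-byte megabytes, since that reproduces the reported values to within rounding.

```python
# Compare the two backup-script results in common units.
# Assumption: the script's "MegaBytes" are 2**20 bytes.
MIB = 1024 * 1024


def summarize(bytes_per_sec):
    """Express a byte rate as MB/s, Mbit/s, and MiB/min."""
    return {
        "MB/s": bytes_per_sec / 1e6,
        "Mbit/s": bytes_per_sec * 8 / 1e6,
        "MiB/min": bytes_per_sec * 60 / MIB,
    }


ten_gig = summarize(3_191_324)   # NAS on the 10gig link
one_gig = summarize(73_373_607)  # NAS on the 1gig link
slowdown = 73_373_607 / 3_191_324

for label, result in (("10gig", ten_gig), ("1gig", one_gig)):
    print(label, {unit: round(value, 2) for unit, value in result.items()})
print(f"slowdown: about {slowdown:.0f}x")
```

In these units the 10gig run works out to about 3.2 MB/s (roughly 26 Mbit/s), against about 73 MB/s (roughly 587 Mbit/s) on 1gig, so the backup runs around 23 times slower over the faster link.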

Everything I found online seems to be about people not reaching the upper end of the speed range, with very little, if anything, showing this level of performance. I don’t expect to squeeze every last bit out of the 10gig, and I’m not looking for that in any way.

I just donno…

Thanks to all for your time and comments.