My array has been having issues for some time now (over a year), with drives becoming unavailable and throwing errors. However, the drives all test fine in Windows, so I've been re-adding them to the array.

Current system:

- 2 vdevs of 10x WD Red Pro 20TB in RAID-Z2

- LSI 9305-24i HBA

- AMD EPYC 7443P

- ASRock ROMED8-2T motherboard

- 512GB ECC RAM

- Silverstone RM43-320-RS case

I've tried another 9305 HBA, new SAS cables, a different case (I switched from a Norco 4224), and reseating the CPU, but the problem keeps persisting.

All hell broke loose yesterday.

The pool went offline, and restarts haven't brought it back.

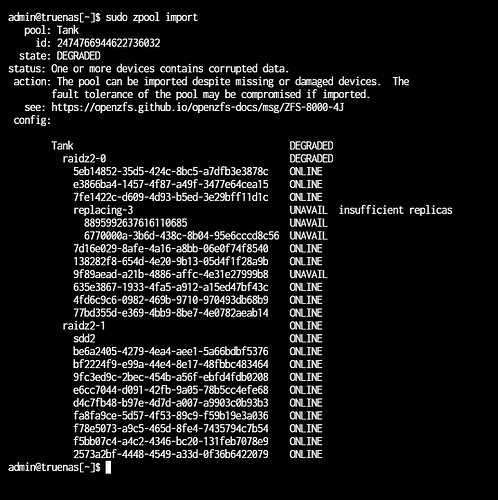

As you can see from the image, 2* drives are offline and 1 was in the process of replacing itself. With 8 of 10 drives still present, a RAID-Z2 vdev should be able to continue, but when I go to import the pool the GUI doesn't give me the option, so I tried `zpool import` in the shell and got the following error:

How screwed am I? What can I do to recover my data? (Of course, no backups.)

Of the 120TB used, only 8TB is what I need to get off, as it can't be replaced.
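The reasoning that 8 of 10 drives should be enough can be made explicit per vdev. A RAID-Z2 vdev can lose any 2 members and still reconstruct its data, and because data is striped across top-level vdevs, every vdev in the pool has to stay within its own tolerance. The sketch below is a simple counting model only: the `RaidzVdev` class and `pool_can_import` function are hypothetical names for illustration, the unavailable counts in the example are assumed rather than read from the screenshot, and it does not model the other reasons an import can fail (for example, disks that are present but returning errors).

```python
from dataclasses import dataclass


@dataclass
class RaidzVdev:
    """One RAID-Z top-level vdev (hypothetical model for illustration)."""
    name: str
    disks: int        # total member disks in the vdev
    parity: int       # 2 for RAID-Z2
    unavailable: int  # members counted as offline/faulted/missing

    def surviving(self) -> int:
        return self.disks - self.unavailable

    def has_enough_members(self) -> bool:
        # A RAID-Z vdev with parity p can rebuild data with at most p members missing.
        return self.unavailable <= self.parity


def pool_can_import(vdevs: list[RaidzVdev]) -> bool:
    # Data is striped across top-level vdevs, so every one of them must be usable.
    return all(v.has_enough_members() for v in vdevs)


# Assumed example: 2 vdevs of 10 disks in RAID-Z2, 2 members missing in the first.
layout = [RaidzVdev("raidz2-0", 10, 2, 2), RaidzVdev("raidz2-1", 10, 2, 0)]
print(layout[0].surviving())    # 8
print(pool_can_import(layout))  # True
```

By this count alone, 8 of 10 members in a RAID-Z2 vdev is still within tolerance; a third missing member in the same vdev would not be.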

Over the course of the year I have replaced every single component in the system: I've tried 2 different HBAs, new SAS cables, a new case (backplanes), a fresh TrueNAS install, and a new CPU platform. I had the same issue with 8TB drives, so I upgraded to 20TB drives. I have run out of things to replace.

Yes, I know I should have had backups, but I hadn't gotten to the root of the problem with all the "old" parts; once I had, I was going to use them to build a backup system.

It is hard to back up when you have so much data.

One is none, and two is one.

I've tried `zpool import -f -o readonly=on -R /mnt Tank`, and I was able to see the datasets, but I wasn't able to see the pool on the Storage page or create a share that I could use to access the data:

```
[EINVAL] sharingsmb_create.path_local: The path must reside within a pool mount point

Error: Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 198, in call_method
    result = await self.middleware.call_with_audit(message['method'], serviceobj, methodobj, params, self)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1466, in call_with_audit
    result = await self._call(method, serviceobj, methodobj, params, app=app,
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1417, in _call
    return await methodobj(*prepared_call.args)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/service/crud_service.py", line 179, in create
    return await self.middleware._call(
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1417, in _call
    return await methodobj(*prepared_call.args)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/service/crud_service.py", line 210, in nf
    rv = await func(*args, **kwargs)
         ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 47, in nf
    res = await f(*args, **kwargs)
          ^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 187, in nf
    return await func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/plugins/smb.py", line 1022, in do_create
    verrors.check()
  File "/usr/lib/python3/dist-packages/middlewared/service_exception.py", line 70, in check
    raise self
middlewared.service_exception.ValidationErrors: [EINVAL] sharingsmb_create.path_local: The path must reside within a pool
```
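The validation error says the share path must reside within a pool mount point. As an illustration only (this is not TrueNAS's actual middleware code), a check of that kind could compare the requested path against the mountpoints of pools the middleware knows about; that comparison, the helper name `resides_within`, and the dataset path `/mnt/Tank/important` are all assumptions. With `-R /mnt`, a pool named `Tank` typically mounts its root dataset at `/mnt/Tank`, but since the manually imported pool did not show up on the Storage page, a check like this would have no matching mountpoint to accept.

```python
import os


def resides_within(path: str, mountpoints: list[str]) -> bool:
    """Return True if `path` equals, or lies beneath, one of `mountpoints`.

    Hypothetical helper for illustration; not TrueNAS middleware code.
    Paths are normalized lexically only (symlinks are not resolved).
    """
    if not os.path.isabs(path):
        return False
    target = os.path.normpath(path)
    for mp in mountpoints:
        if not os.path.isabs(mp):
            continue  # skip relative entries; commonpath cannot mix them with absolute paths
        root = os.path.normpath(mp)
        # commonpath compares whole path components, so "/mnt/Tankold"
        # is NOT treated as being inside "/mnt/Tank".
        if os.path.commonpath([target, root]) == root:
            return True
    return False


# Hypothetical paths: the second call models a pool the middleware does not know about.
print(resides_within("/mnt/Tank/important", ["/mnt/Tank"]))  # True
print(resides_within("/mnt/Tank/important", []))             # False
print(resides_within("/mnt/Tankold", ["/mnt/Tank"]))          # False
```

Comparing whole path components rather than using a plain string prefix test avoids accepting sibling directories that merely share a name prefix.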