Thanks for all your excellent videos @LTS_Tom! I’ve watched SO many, and yet I can’t quite crack this nut. I searched the boards here and on iXsystems too.

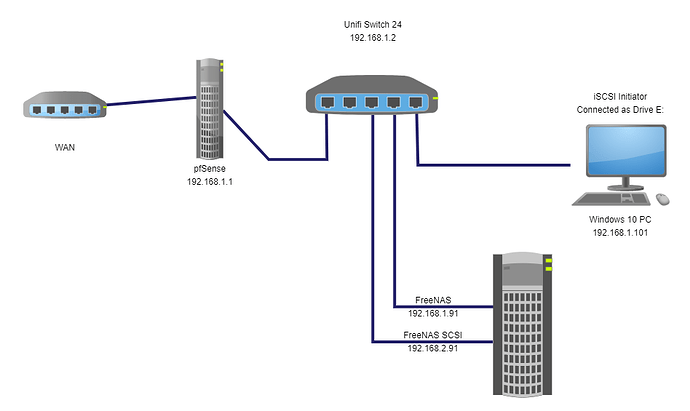

It occurred to me that I could use the extra ports in my FreeNAS box to physically separate the iSCSI service from the rest of the services, and my first pass was to just assign the next free address to the iSCSI service. FreeNAS runs on igb0 at 192.168.1.91, so I added the second port (igb1) on 192.168.1.92. Worked like a champ. I was able to reconnect the iSCSI Initiator no problem, BUT in practice I was seeing traffic on both interfaces in FreeNAS (Tx on igb0 nearly mirrored Rx on igb1). In theory I should not have seen anything on igb0 because I was not doing anything else… BUT apparently putting two interfaces on the same subnet is a big no-no, as explained pretty thoroughly here on iXsystems:

So, I moved igb1 to 192.168.91.91 (just to make it easy to spot with the obvious 91s) and figured I would have pfSense route the traffic between the two subnets. Multiple benefits here (maybe): 1) avoiding the problem Tom mentioned about having iSCSI and SMB traffic bring down the interface with a “no ping reply” timeout from the watchdog (it’s true - I’ve lived it); 2) isolating my FreeNAS box from the Internet; 3) increasing performance by having a separate gigabit link?
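To make the difference between the two attempts concrete, here is a minimal Python sketch that flags interfaces sharing a subnet. It assumes a /24 mask (255.255.255.0) on every interface, which the post does not actually state, and `interfaces_sharing_subnet` is just an illustrative helper name.

```python
import ipaddress
from collections import defaultdict


def interfaces_sharing_subnet(interfaces):
    """Return {network: [interface names]} for networks used by more than one interface."""
    by_network = defaultdict(list)
    for name, cidr in interfaces.items():
        # ip_interface() keeps the host address and derives the enclosing network
        network = ipaddress.ip_interface(cidr).network
        by_network[network].append(name)
    return {str(net): names for net, names in by_network.items() if len(names) > 1}


# ASSUMPTION: /24 masks; the addresses themselves come from the post.
first_attempt = {"igb0": "192.168.1.91/24", "igb1": "192.168.1.92/24"}
second_attempt = {"igb0": "192.168.1.91/24", "igb1": "192.168.91.91/24"}

print(interfaces_sharing_subnet(first_attempt))   # {'192.168.1.0/24': ['igb0', 'igb1']}
print(interfaces_sharing_subnet(second_attempt))  # {}
```

With both ports in 192.168.1.0/24 the host has two equally valid ways out to the same network, which fits the mirrored Tx/Rx counters described above. Moving igb1 to 192.168.91.0/24 removes the overlap.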

Cannot get it to work. Referenced the “How To Setup VLANS With pfsense & UniFI. Also how to build for firewall rules for VLANS in pfsese [sic]” video as a guide, and added a VLAN 91 on pfSense LAN Interface (called it iSCSI) and then made firewall rules to route “any” from iSCSI net to LAN net and the reverse. I have Block private and Bogons turned off. Still can’t ping from x.x.1.x to x.x.91.x. Totally stuck.
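Since ping only exercises ICMP, it may also be worth testing the iSCSI service itself from the Windows machine. iSCSI targets listen on TCP port 3260 by default. The sketch below is a rough check, assuming the FreeNAS portal is bound to the 192.168.91.91 address and left on the default port; `tcp_port_open` is a made-up helper name, not part of any FreeNAS or pfSense tooling.

```python
import socket

ISCSI_PORT = 3260  # default iSCSI target port


def tcp_port_open(host, port=ISCSI_PORT, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks
        return False
```

Calling `tcp_port_open("192.168.1.91")` and `tcp_port_open("192.168.91.91")` from the PC would show whether the target is reachable on each address. If the first returns True and the second False, the problem is somewhere in the path to the 91 subnet (VLAN tagging, switch ports, or firewall rules) rather than in the iSCSI service.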

Big question: Is this the best way to do this from a FreeNAS iSCSI standpoint, and if so, what might I be doing wrong?

Could you draw a simple schematic on how the network is?

I can’t really understand where the iSCSI initiator is in the setup.

I would not route any storage-based traffic such as iSCSI or NFS because of potential performance issues. You should keep all IPs on the same network so the traffic is only switched between interfaces.
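As a rough illustration of switched versus routed, this Python sketch classifies the path from the initiator to each FreeNAS address. The PC address 192.168.1.50 and the /24 mask are assumptions for the example only; neither appears in the thread.

```python
import ipaddress


def path_type(src_cidr, dst_ip):
    """Return 'switched' if dst is on the sender's own subnet, else 'routed'."""
    src = ipaddress.ip_interface(src_cidr)
    dst = ipaddress.ip_address(dst_ip)
    # On-link destinations are reached directly; everything else goes via the gateway
    return "switched" if dst in src.network else "routed"


PC = "192.168.1.50/24"  # HYPOTHETICAL Windows PC address and mask

print(path_type(PC, "192.168.1.91"))   # switched
print(path_type(PC, "192.168.91.91"))  # routed
```

In the routed case every iSCSI packet has to pass through the router (pfSense here) in both directions, which is the performance concern raised above.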

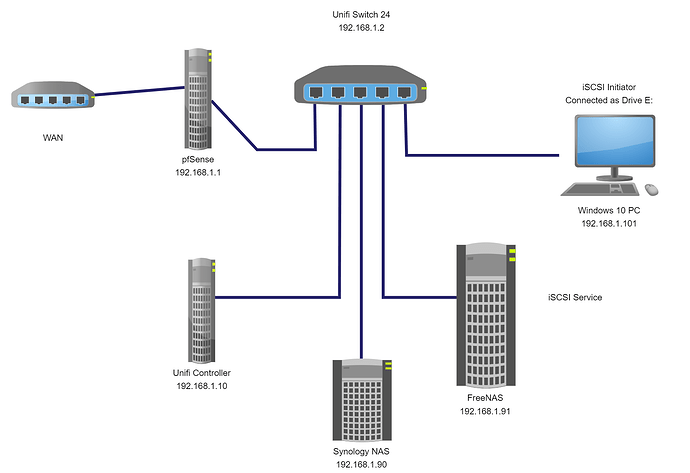

Definitely. I did kind of leave that out from a topology standpoint. It’s a super basic setup. I’m using the iSCSI volume as a Drive E: for my Windows machine mostly for large files that I don’t want on my internal SSD. I want the files safe and handy with snapshot ability, but I don’t need instant access. Videos, games, eBooks, etc. Very similar to Tom’s use case here. The diagram was one I already had, so just ignore the Unifi Controller and the Synology.

The pfSense box and the FreeNAS server both have quad Intel NICs. Assuming I want to get the most out of the hardware, is there a better configuration than just letting the iSCSI server run on the main FreeNAS igb0 interface/IP and leaving the other 3 ports idle? I could revert to the single-port configuration, but there is definitely an issue when it’s under load. In fact, between my first post and this one, I started a robocopy, but FreeNAS went into brain lock about halfway through 60 GB. I could not access it over the network and the console was dead.

Main question: Any suggestions for optimizing/maximizing this type of setup?

Side question: who has seen the FreeNAS lockup problem besides me and @LTS_Tom?

Taking this right back to basics, is your Windows 10 PC multi-homed? Does it have a second NIC available (or could you add one in) to run your storage traffic? Then there’s no need to involve pfSense in routing your storage traffic across VLANs.

Create a separate VLAN on your UniFi network for your storage traffic and then assign switch ports for the traffic. Then it’s contained within its own isolated network without a route to the Internet; job done.

Can’t say I’ve seen the FreeNAS lockup issue as I’ve always put iSCSI traffic on its own storage VLAN and set static IP addresses on those interfaces.

Interesting idea. I could add a card. It’s only my PC that needs the iSCSI. But it’s no picnic to run the two extra cables.

So, academically, how would I do it with software?

I have to admit I have never done this myself, but I did find this interesting article, and it looks like it is possible if running Win10: http://www.wensley.org.uk/vlan. It looks like you need to stand up a virtual switch with Hyper-V on your desktop, and then you can create your sub-interfaces and VLAN tagging for those ports. The vswitch would then use your physical NIC as a trunk to the switch.

Personally when I have multiple interfaces, I run physical connections to the switch and tag them at the access port. Good luck!

Dang it. I can’t believe it’s not easier than this. Here’s the dead simple diagram of the idea:

As a baby step test, if I add a virtual IP in pfSense on a different subnet, Windows happily pings that address, so why would I need a separate VM (or a separate physical interface) just to connect to a thing on another subnet? I think

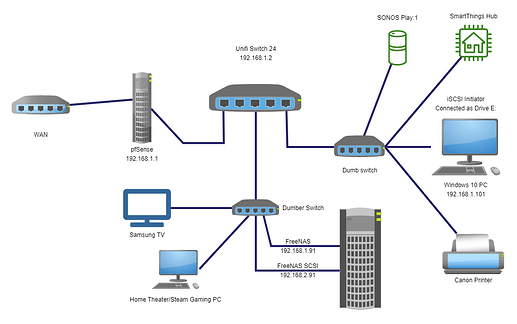

@cairnsmaterio is on the right track with the VLAN config. I went as far as defining the VLAN before, but did not configure the UniFi switch ports. My devices are actually one more dumb switch away from each other on both ends. So technically it looks like this:

This might be another can of worms because not every device on the respective legs would live in the same VLAN… unless it means I need managed switches in place of the dumb ones. Isn’t this analogous to accessing an IP camera or doorbell on another subnet from my Windows PC?

I can’t bear this being over my head, but then again I have not been technical professionally for many years and was never a “networking professional”. Very much an ex-coder, weekend warrior, and endless tinkerer. Love home automation, gaming, and media, so this just ends up being an extension of that, but keep hitting performance limitations that I hate. Any other suggestions on the setup before I fire up the VLAN option again? This is my first VLAN, and I thought iSCSI would be the easy one before I tackled walling off my IoT devices, so I really appreciate the help!!

Without the mess of diagram #2 (because I can wire around the dumb switches), how does a mere mortal solve for the problem in diagram #1?

Backup plan: if I’m going to run any wires, it’s going to be a 10GbE upgrade directly between my PC and FreeNAS.

Aside: Locked it up again… only happens under load. It will run for weeks and weeks if I don’t push it, and it’s the deadly combo of SMB and iSCSI running full tilt that does it.