Hello,

I hope this e-mail finds everyone well and that you are all managing the snow we are getting here. I think Buffalo, NY has over 4 feet in some areas after just 24-36 hours.

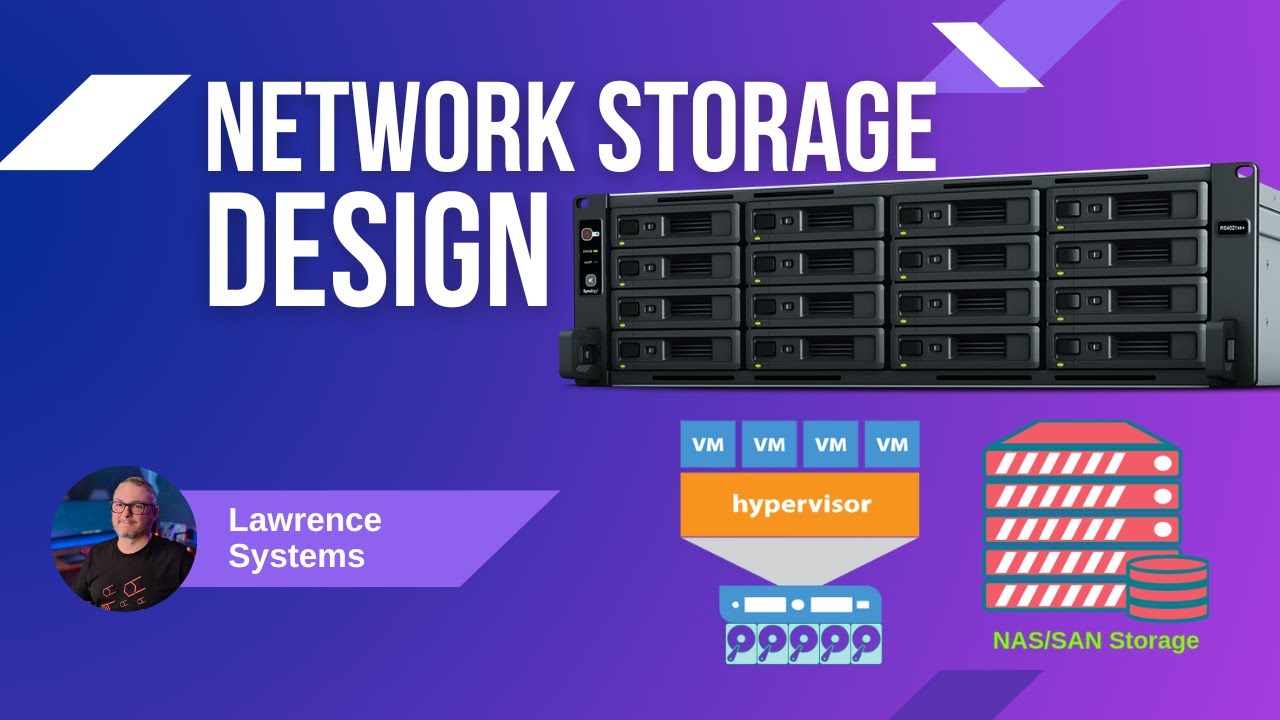

We have individual 1U rack-mounted servers from Dell and Lenovo. The Dells use RAID1 and the Lenovos use RAID5. We are currently buying more Lenovo servers and would like to move to fiber-based storage, which should make it easier when we migrate from ESXi to XCP-ng. That’s our goal.

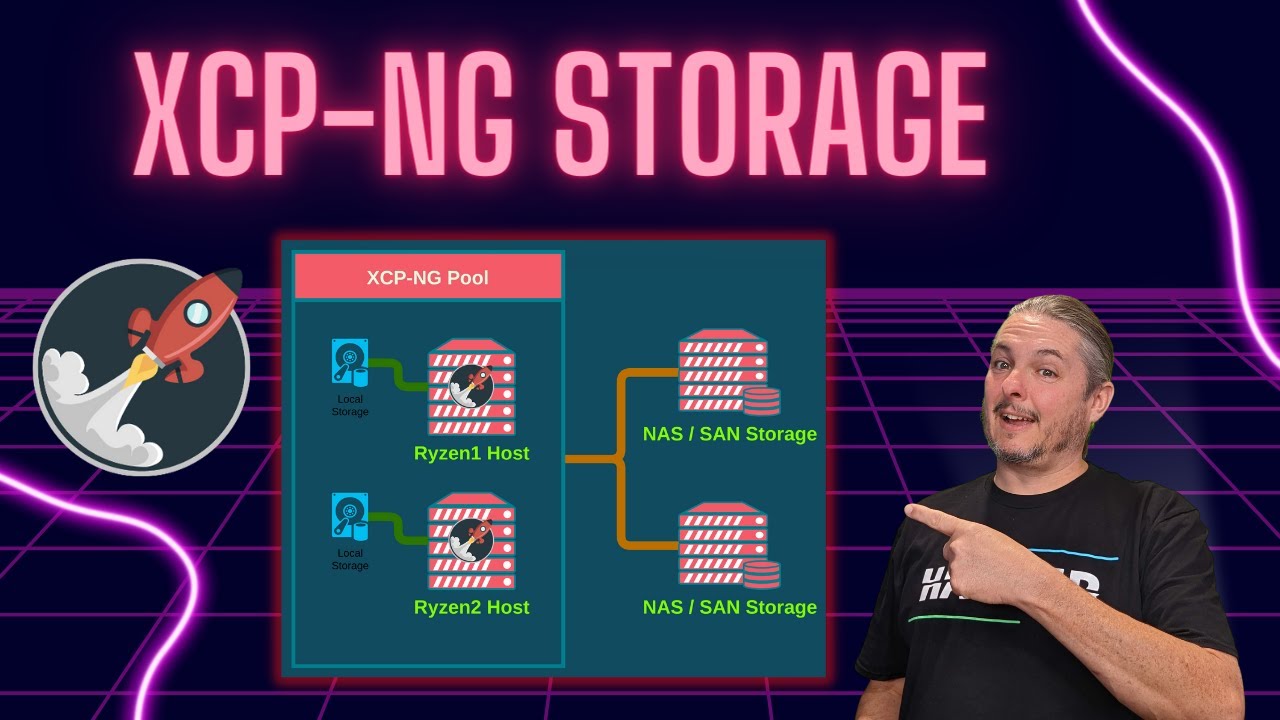

I have been looking at 45Drives and Synology. We are not a big company (under 20 people), but we really like the idea of XCP-ng running the virtual servers from a central storage solution. We would like to order the new Lenovo servers with a minimal storage configuration, maybe installing two M.2 drives just for XCP-ng and then linking the virtual servers to the hosts over fiber. Then we can start migrating everything from ESXi onto the XCP-ng servers.

What I’m looking for is a reasonable way to do this without spending a lot of money. Since we are a small company, I’m hoping there is a low-cost approach, and I would appreciate suggestions on the following:

- For the physical Dell and Lenovo servers, what would be a good fiber storage card to purchase at a reasonable price?

- What would folks recommend for the fiber switch? We wouldn’t need a very big one: we will have approximately 10 physical servers connected, plus the network storage.

- And finally, what would you recommend as the best storage system to go with? I’m open to a number of things, including TrueNAS if that makes sense, but I think that means I would have to buy another server and set up RAID5 or better on it. I’m just looking for something reasonably priced that will give us anywhere from 10TB to 30TB across multiple drives. I don’t want to have to worry when a single drive goes bad; it should just be a matter of purchasing a new one. Currently, we normally purchase 3-6 extra drives at the time we purchase the server/storage device. I would like to not have to worry about that in the future and just install any new drive that meets or exceeds the specs of the faulty drive. Any thoughts on that would be appreciated too!

I’m just trying to keep this simple and cost-effective. We really like the idea of a central location where our virtual servers are “stored”, moving away from the physical servers storing the virtual machines locally. The only other thing we would like is for the SAN/storage solution to have an SMB share or some type of folder where we can store the ISOs our company uses and software backups.

I really appreciate everyone reading this, and any suggestions would be GREATLY APPRECIATED! THANK YOU SO MUCH!

Thanks again,

Neil