tl;dr: Moved to TrueNAS Scale and hit a few hiccups. Two of my three pools saw no meaningful speed change: the Supermicro SATA controller and Dell H200E pools were within margin of error or slightly faster. The pool on my IBM M1015 dropped ~60 MB/s write and ~120 MB/s read.

I figured I would give it a try; like others, I'm excited to test out containers on Scale. It was a bumpy road for me, and I didn't get the luxury of an in-place migration. So my experience is a fresh install and a manual migration, which honestly wasn't terrible.

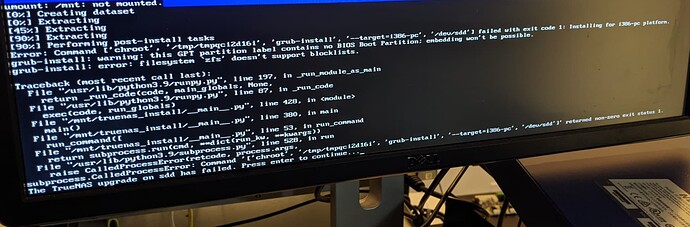

When trying the upgrade route, I kept getting these errors: “Warning: this GPT partition label contains no BIOS boot partition; embedding won’t be possible” … “error: filesystem ‘zfs’ doesn’t support blocklists”

I tried the in-place upgrade both ways: leaving the boot partition untouched, and letting the upgrade rewrite it. Same error both times.

Then I thought maybe it was because I was booting from a flash drive, so I removed a SLOG SSD from one of my pools and used it as the boot drive. Fresh install of TrueNAS Core > restore from backup > migrate to Scale. Same failure.

Then I thought maybe it was because I was using UEFI, so I went into the BIOS, set it to legacy boot, reinstalled Core and tried the upgrade to Scale again. Same error.

So I figured I might as well spend the time and energy setting everything up manually; it gave me a chance to learn where things were anyway.

I had some self-imposed problems. ESXi got pissy about the iSCSI volumes, possibly because the serial number or some other unique identifier changed while the volume names stayed the same. I force-mounted them manually via the CLI.

Before migrating, I stopped all my containers that used NFS shares, but I did not unmount the shares. After recreating the shares I kept getting error 521, and Google didn't help. Then I remembered I had never unmounted them, so I just rebooted the Docker server.
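In hindsight, unmounting the NFS shares on the Docker host before taking the old server down would have avoided the reboot. A minimal sketch for finding them, assuming a Linux Docker host that exposes `/proc/mounts`; the function name `nfs_mounts` is just illustrative:

```shell
#!/bin/sh
# Sketch: print every NFS mount point on a Linux client (e.g. the Docker host)
# so the shares can be unmounted before the NFS server is rebuilt.
# Reads /proc/mounts by default; another file in the same format can be passed.
nfs_mounts() {
  # /proc/mounts columns: device, mount point, fs type, options, dump, pass
  awk '$3 == "nfs" || $3 == "nfs4" { print $2 }' "${1:-/proc/mounts}"
}
```

As root, something like `nfs_mounts | while read -r m; do umount "$m"; done` would then unmount each share. Mount points containing spaces appear escaped (`\040`) in `/proc/mounts`, so this simple loop assumes there aren't any.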

When recreating my account on the Scale server, I couldn't remember my UID and figured it was 1000. I figured wrong, so I took the chance to clean up my ACLs.
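Rather than guessing the UID, the numeric owner of the existing data can be read straight off the files. A small sketch; the `owner_ids` helper name is hypothetical, and the fallback assumes GNU `stat` on Linux (Scale) and BSD `stat` on FreeBSD (Core):

```shell
#!/bin/sh
# Sketch: print the numeric owner of an existing file or directory as UID:GID,
# to compare against the account recreated on the new server.
owner_ids() {
  # GNU stat (Linux, e.g. TrueNAS Scale) takes -c; BSD stat (TrueNAS Core) takes -f
  stat -c '%u:%g' "$1" 2>/dev/null || stat -f '%u:%g' "$1"
}
```

For example, run `owner_ids /mnt/pool1/share` against a share, then compare with `id -u youruser` for the recreated account (path and user name are placeholders). `ls -n` shows the same numeric IDs.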

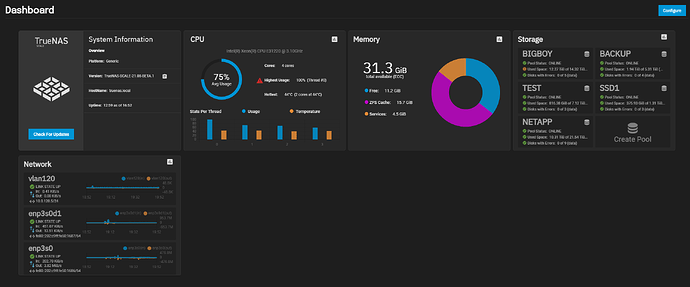

With the ACLs fixed and the NFS, iSCSI, and SMB shares recreated, I'm operational. I also ran some before-and-after speed tests.

Server hardware:

- Xeon E3-1220 (x1)
- Supermicro X9SCM-F
- 32GB ECC (was only an extra $10 at the time)
- Dell LSI 6Gbps SAS HBA Dual Port External 12DNW H200E
  - Connects to a NetApp DS4246 disk shelf
    - 12x 4TB HGST SAS 7200rpm
- IBM M1015
  - Forward breakout cables to a SATA backplane
    - 5x 4TB Seagate trash (7200rpm?)
    - 3x 3TB WD Red (5400rpm)
- Local SATA controller
  - 3x 500GB Samsung SSDs (870s? I forget)

I have three main pools, each on a different controller, so I figured this would be a decent test. I ran simple dd tests writing 100GB of zeros. I'm not looking for real-world numbers, just something consistent to compare.

| Pool | Layout | Controller | Write before | Write after | Read before | Read after |
|---|---|---|---|---|---|---|
| Pool 1* | 5x 4TB RAIDZ1 | IBM M1015 | 216 MB/s | 158 MB/s | 294 MB/s | 177 MB/s |
| Pool 2 | 9x 4TB SAS RAIDZ1 | Dell H200E | 463 MB/s | 512 MB/s | 243 MB/s | 248 MB/s |
| Pool 3 | 3x SSD stripe, no parity | Supermicro SATA controller | 1.25 GB/s | 1.3 GB/s | 1.27 GB/s | 1.3 GB/s |

\*Pool 1 is 87% full.
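To put the before/after numbers in relative terms, here's a small sketch that computes the percent change per pool and direction. The helper name `pct_change` and the row labels are made up for illustration, and the GB/s results are entered as MB/s (x1000, decimal units assumed) so every row shares a unit.

```shell
#!/bin/sh
# Percent change between the before/after dd results.
# GB/s figures are entered as MB/s (x1000) so every row uses the same unit.

pct_change() {
  # $1 = before, $2 = after; prints the signed change in percent, 1 decimal
  awk -v b="$1" -v a="$2" 'BEGIN { printf "%+.1f\n", (a - b) / b * 100 }'
}

# label, before, after
while read -r label before after; do
  printf '%-12s %s%%\n' "$label" "$(pct_change "$before" "$after")"
done <<'EOF'
pool1_write 216 158
pool1_read 294 177
pool2_write 463 512
pool2_read 243 248
pool3_write 1250 1300
pool3_read 1270 1300
EOF
```

With these inputs it prints -26.9% and -39.8% for Pool 1, against +10.6%/+2.1% for Pool 2 and +4.0%/+2.4% for Pool 3. The "after" SSD figures only have two significant digits, so the Pool 3 percentages are rough.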

dd commands (2 MiB blocks x 50k = 51,200 blocks = 100 GiB):

Write test: `dd if=/dev/zero of=tmp.dat bs=2048k count=50k`

Read test: `dd if=tmp.dat of=/dev/null bs=2048k count=50k`

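lz4 compression was turned off, since a stream of zeros would otherwise compress away to almost nothing and the test would barely touch the disks.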
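To rerun the same write/read pair consistently on each pool, the two commands can be wrapped in a small function. This is a sketch: the `ddbench` name and the `/mnt/pool1/bench` path below are hypothetical, and it assumes compression is already off on the target dataset (`zfs get compression pool/dataset` shows the current setting).

```shell
#!/bin/sh
# Sketch of a wrapper around the same dd write/read tests.
# ddbench DIR [COUNT]: writes COUNT blocks of 2 MiB of zeros to DIR/tmp.dat,
# reads the file back, prints dd's summary line for each pass, then deletes it.
# COUNT defaults to 51200 (same as "count=50k", i.e. 100 GiB).
ddbench() {
  dir=$1
  count=${2:-51200}
  f="$dir/tmp.dat"
  echo "write:"
  # dd reports its statistics on stderr; keep only the final summary line
  dd if=/dev/zero of="$f" bs=2048k count="$count" 2>&1 | tail -n 1
  echo "read:"
  dd if="$f" of=/dev/null bs=2048k count="$count" 2>&1 | tail -n 1
  rm -f "$f"
}
```

Usage: `ddbench /mnt/pool1/bench`. Because the read runs right after the write, part of the file may still be cached in ARC; a 100 GiB file on a 32GB machine keeps that effect small.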

More to write; I'll update later.