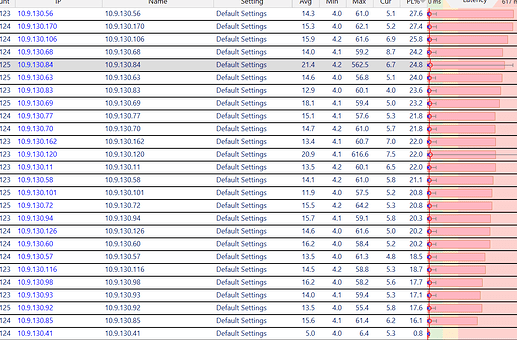

We have four locations. Only one of them is experiencing heavy packet loss (10-15%), and it affects specific VoIP phone deployments that have been in place for years.

We have narrowed the phones down to a single switch, put them on their own VLANs, and segmented them into small groups. We have tried many different switches, even from different brands. We have even shut off power to the entire building and brought up only the switch, to rule out any electrical interference.

We have replaced phones, replaced switches, and reorganized the network, and nothing fixed it. When we shut off the native VLAN on a phone’s port, the problem stops. As soon as we turn it back on, it’s as if the phone is listening to the native VLAN and getting bogged down by something, even in small groups.
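One plausible reason the native VLAN matters: switches flood link-local multicast (224.0.0.0/24, which includes mDNS at 224.0.0.251) to every port in the VLAN even when IGMP snooping is enabled, so anything listening on the native VLAN receives every host’s chatter. The back-of-the-envelope sketch below estimates what each port absorbs; the host count, packet rate, and packet size are hypothetical placeholders, not measurements from this network.

```python
def flooded_pps(hosts: int, pps_per_host: float) -> float:
    """Packets per second that reach EVERY port in the VLAN.

    Link-local multicast (224.0.0.0/24, including mDNS at 224.0.0.251) is
    flooded to all ports, so each port sees the sum of all senders.
    """
    return hosts * pps_per_host


def flooded_multicast_kbps(hosts: int, pps_per_host: float, avg_packet_bytes: int) -> float:
    """The same flood expressed as kilobits per second per port."""
    return flooded_pps(hosts, pps_per_host) * avg_packet_bytes * 8 / 1000


if __name__ == "__main__":
    # Hypothetical inputs, not measurements: 150 hosts, 20 mDNS packets/s each,
    # about 300 bytes per packet.
    hosts, rate, size = 150, 20, 300
    print(f"{flooded_pps(hosts, rate):.0f} packets/s per port")
    print(f"{flooded_multicast_kbps(hosts, rate, size):.0f} kbps per port")
```

Plugging in rates taken from your own capture gives a rough idea of what each phone’s native-VLAN side has to handle; for a phone’s small CPU, the packet rate may matter more than the raw bandwidth.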

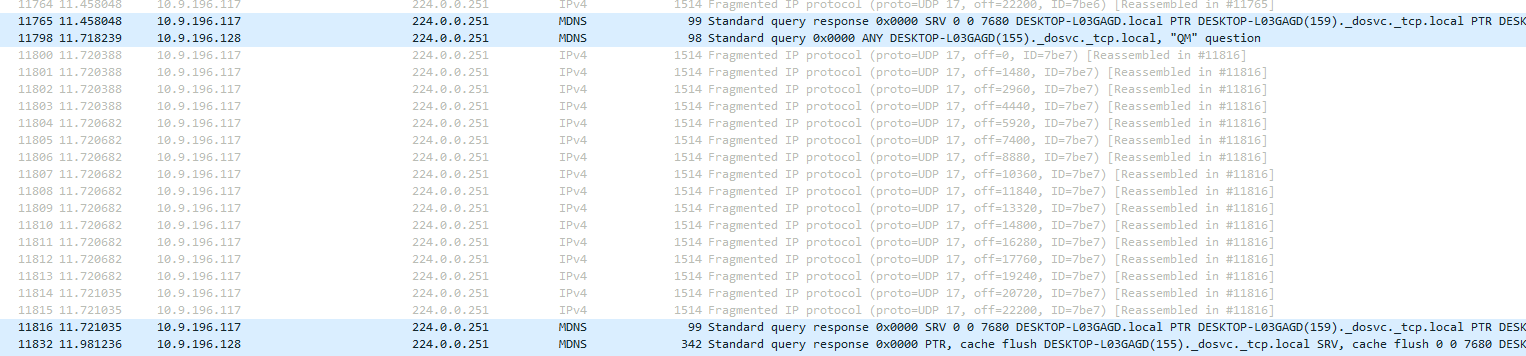

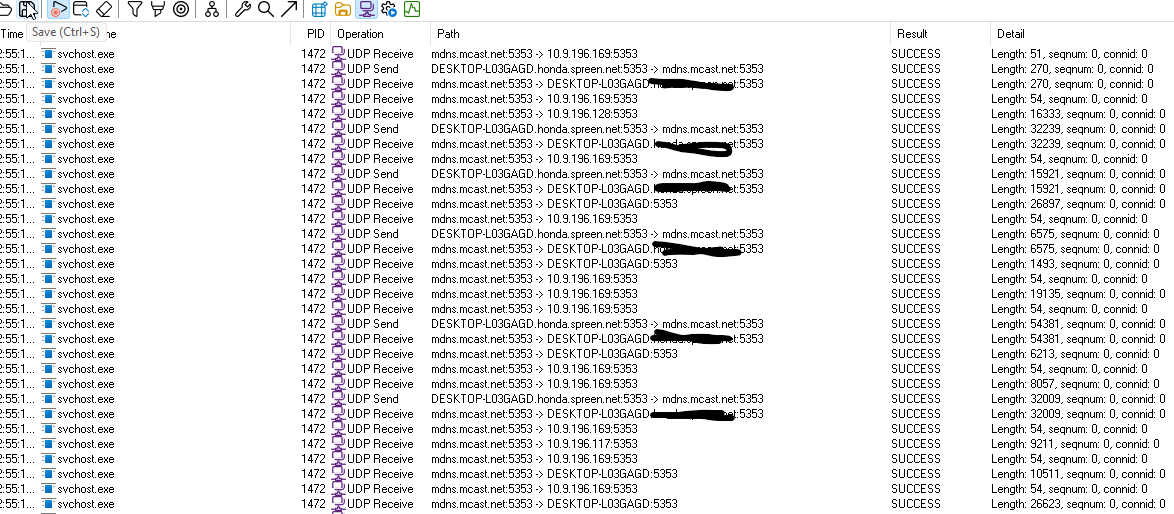

From there we narrowed it down to a multicast storm, specifically one coming from the Windows machines in the building. I’m not sure why Windows 11 would be so mDNS-intensive, but in just a few small tests we saw gigabytes of data flowing out from the clients across the network.
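To see which machines are responsible, one option is to export a capture and total the mDNS bytes per source. mDNS uses UDP port 5353 to the group 224.0.0.251 (IPv4) or ff02::fb (IPv6). The sketch below assumes the export has already been reduced to hypothetical `(src, dst, dst_port, length)` tuples; that row format is an assumption, not any tool’s native output.

```python
import ipaddress
from collections import Counter

# mDNS well-known multicast groups (IPv4 and IPv6) and its UDP port.
MDNS_GROUPS = {ipaddress.ip_address("224.0.0.251"), ipaddress.ip_address("ff02::fb")}
MDNS_PORT = 5353


def is_mdns(dst: str, dst_port: int) -> bool:
    """True if the packet is addressed to an mDNS group on port 5353."""
    return dst_port == MDNS_PORT and ipaddress.ip_address(dst) in MDNS_GROUPS


def mdns_bytes_by_source(rows):
    """Sum mDNS bytes per source address.

    rows: iterable of (src, dst, dst_port, length) tuples, a hypothetical
    format you would produce from your own capture export.
    """
    totals = Counter()
    for src, dst, dst_port, length in rows:
        if is_mdns(dst, dst_port):
            totals[src] += length
    return totals


if __name__ == "__main__":
    sample = [  # made-up rows for illustration only
        ("10.0.0.21", "224.0.0.251", 5353, 420),
        ("10.0.0.21", "224.0.0.251", 5353, 380),
        ("fe80::1c2a", "ff02::fb", 5353, 510),
        ("10.0.0.40", "10.0.0.1", 53, 80),  # ordinary DNS, not counted
    ]
    for src, total in mdns_bytes_by_source(sample).most_common():
        print(src, total)
```

Wireshark’s `mdns` display filter can do the filtering step interactively; the script is only useful once you want totals across many hosts.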

mDNS traffic spikes: More recently, I’ve noticed that mDNS traffic is going wild on my network, and I’m trying to understand why it’s happening and whether it’s contributing to the packet loss. I’ve read that mDNS is useful for local name resolution, but in my case it seems to be generating excessive multicast traffic.

Why would these computers be calling out so much?

To reduce it, we started by disabling IPv6 on those machines. That alone did not help, since they just sent the same traffic over IPv4, but it did help solve the problem, because I could then easily block it on the switch itself with an ACL matching 224.0.0.251, UDP port 5353. I have the commands if anyone wants them for UniFi USW 48-port PoE switches; UniFi’s documentation for this is not great.

In another part of the network, a separate building connected over fiber, we haven’t removed IPv6, and we see mDNS coming through there as well. I’ve tried to block it on IPv6 but can’t make the rule specific enough; instead I was blocking all UDP traffic on IPv6, and I’ve just read that this isn’t recommended.
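Blocking all IPv6 UDP is discouraged because other essential traffic also rides on UDP, such as DHCPv6 (port 547) and ordinary DNS (port 53). The IPv6 counterpart of the IPv4 rule is a match on the mDNS group ff02::fb with UDP port 5353, if the switch can express it. The sketch below models an ACL as a hypothetical first-match rule list (not UniFi syntax) to compare the two approaches; it assumes unmatched traffic is permitted, so check how your switch actually treats traffic that matches no rule.

```python
import ipaddress
from typing import NamedTuple, Optional


class Rule(NamedTuple):
    """Simplified ACL entry (hypothetical model, not any vendor's syntax)."""
    action: str              # "deny" or "permit"
    dst_net: str             # destination prefix, IPv4 or IPv6
    proto: str               # "udp", "tcp", or "any"
    dst_port: Optional[int]  # None means any port


def evaluate(rules, dst: str, proto: str, dst_port: int) -> str:
    """Return the action of the first matching rule; unmatched traffic is permitted."""
    addr = ipaddress.ip_address(dst)
    for rule in rules:
        # `in` is simply False when the address and prefix are different IP versions.
        if addr not in ipaddress.ip_network(rule.dst_net):
            continue
        if rule.proto != "any" and rule.proto != proto:
            continue
        if rule.dst_port is not None and rule.dst_port != dst_port:
            continue
        return rule.action
    return "permit"


# Narrow: drop only mDNS, on both address families.
NARROW_RULES = [
    Rule("deny", "224.0.0.251/32", "udp", 5353),
    Rule("deny", "ff02::fb/128", "udp", 5353),
]

# Broad: drop every IPv6 UDP packet (the approach described above).
BROAD_RULES = [Rule("deny", "::/0", "udp", None)]

if __name__ == "__main__":
    probes = [
        ("mDNS over IPv6", "ff02::fb", "udp", 5353),
        ("DHCPv6 to servers/relays", "ff02::1:2", "udp", 547),
        ("DNS over IPv6", "2001:db8::53", "udp", 53),
        ("mDNS over IPv4", "224.0.0.251", "udp", 5353),
    ]
    for label, dst, proto, port in probes:
        print(f"{label:26} narrow={evaluate(NARROW_RULES, dst, proto, port):6} "
              f"broad={evaluate(BROAD_RULES, dst, proto, port)}")
```

With the narrow list, only the two mDNS probes are denied; with the broad list, DHCPv6 and DNS over IPv6 are denied too, while IPv4 mDNS still gets through.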

Any thoughts on what’s going on here? Why are these Windows machines producing so much chatter and disruptive traffic that the phones bog down to the point of dropping packets and becoming sluggish?

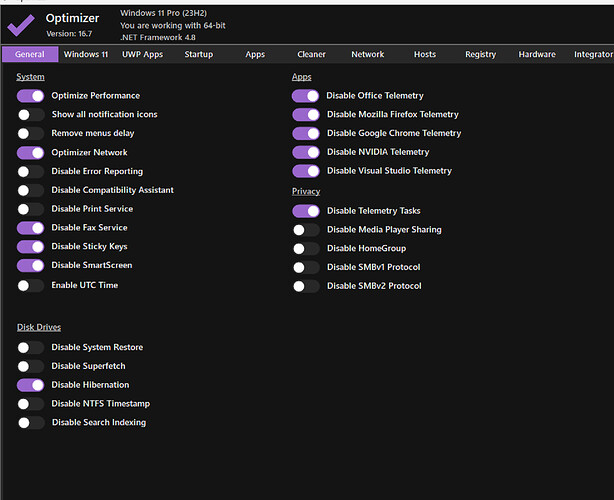

I think it’s pretty insane that we have to block mDNS at the switch level or go to each PC. It’s easier to do with IPv4 than IPv6: to accomplish it, we have to switch off IPv6 and block IPv4 traffic to 224.0.0.251, or shut down the services on 100-200 machines one by one.