Having some fun testing configurations in the lab! I set up a good number of older Supermicro servers/nodes to play with XCP-NG. Each server has a single 64GB SATADOM, 2x 400GB SSDs, 4x 1TB spinning HDDs, 24+ cores, 128GB+ RAM, 2x 1G Ethernet and 2x 10G Ethernet.

Even though I have ZFS installed and working, I am reading here that it is still not “Officially Supported”.

If this is true, does anyone have an idea of when ZFS might become “Officially Supported”?

Thanks!

Mark

Because there is no hardware RAID, I am also looking for suggestions on an optimal configuration.

Here is my favourite XCP-NG configuration so far!

- 75GB mirror for XCP-NG (on the two SSDs), because the SATADOM was noticeably slow and is a pain when it fails

- 100GB mirrored SLOG (on the two SSDs), which helps speed up writes to a ZFS HDD pool on the spinners

- 225GB mirrored ZFS pool for any VMs that need pure SSD. Yes, it competes with the SLOG for performance, but it seems to work very well for the most part.

- ZFS striped mirror pool on the spinning HDDs

- 10G network used heavily
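As a rough check on the layout above, here is a small Python sketch of one node's space budget. It assumes both SSDs carry the same three partitions mirrored pairwise, that the four 1TB drives are arranged as two mirrored pairs striped together, and it uses nominal decimal GB with no filesystem or RAID overhead; the names are illustrative only.

```python
# Hypothetical space budget for one node, using the sizes from the list above.
# Assumptions: both 400GB SSDs carry identical partitions mirrored pairwise,
# the four 1TB HDDs form two mirrored pairs striped together, and all sizes
# are nominal decimal GB (no GiB conversion, no filesystem/RAID overhead).
SSD_SIZE_GB = 400
HDD_SIZE_GB = 1000
HDD_COUNT = 4

# Per-SSD partitions; each is mirrored with its twin on the other SSD,
# so the usable size of each mirror equals the partition size.
ssd_partitions_gb = {
    "XCP-NG install (mirror)": 75,
    "SLOG (mirror)": 100,
    "SSD VM pool (mirror)": 225,
}


def striped_mirror_usable_gb(disk_count: int, disk_gb: int) -> int:
    """Usable capacity of a stripe of two-way mirrors: half of the raw space."""
    if disk_count % 2 != 0:
        raise ValueError("two-way mirrors need an even number of disks")
    return (disk_count // 2) * disk_gb


allocated_per_ssd = sum(ssd_partitions_gb.values())
free_per_ssd = SSD_SIZE_GB - allocated_per_ssd
hdd_usable = striped_mirror_usable_gb(HDD_COUNT, HDD_SIZE_GB)

for name, size in ssd_partitions_gb.items():
    print(f"{name}: {size} GB usable")
print(f"Per SSD: {allocated_per_ssd} GB allocated, {free_per_ssd} GB free")
print(f"HDD pool: {hdd_usable} GB usable of {HDD_COUNT * HDD_SIZE_GB} GB raw")
```

With the sizes listed, the three SSD partitions use the full nominal 400GB of each SSD, and the spinners give about 2TB of usable space out of 4TB raw.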

ZFS is supported, but I think the “official” label is missing because it’s not going to be managed or monitored in Xen Orchestra any time soon.

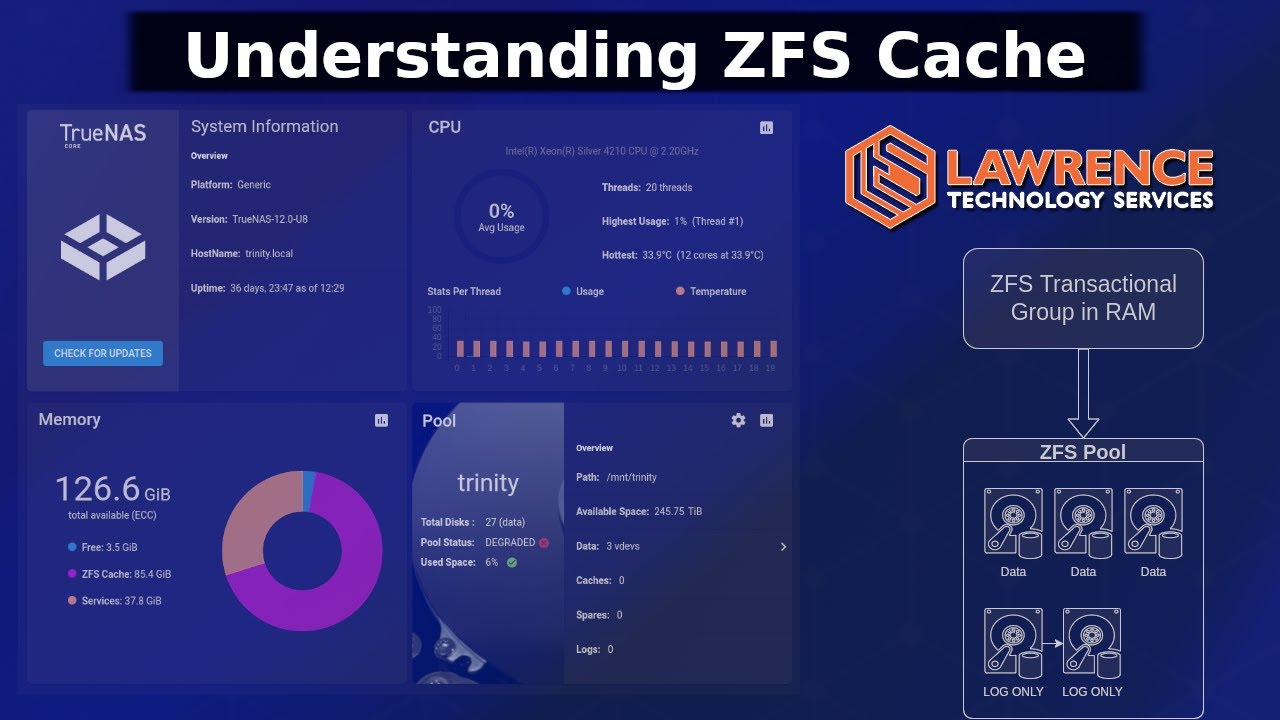

You probably don’t need a SLOG; just turn off sync for the pool. Here is a deeper dive if you want to understand ZFS caching better:

Thanks, and great video! Will test sync settings and report back if anything interesting surfaces.

Here are the test results. Not a perfect test, but still somewhat interesting.

**Without 100GB mirrored SLOG (two SSDs)**

Write tests, Q=1, T=1:

| sync | SEQ 1MiB MB/s | SEQ 1MiB IOPS | SEQ 1MiB latency (µs) | RND 4KiB MB/s | RND 4KiB IOPS | RND 4KiB latency (µs) |
|---|---|---|---|---|---|---|
| always | 25.116 | 24.0 | 41634.48 | 0.368 | 89.8 | 10963.20 |
| standard | 275.173 | 262.4 | 3802.44 | 0.141 | 34.4 | 28762.23 |
| disabled | 1649.127 | 1572.7 | 633.51 | 30.104 | 7349.6 | 134.88 |
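The three numbers in each result are linked, which allows a quick sanity check. This Python sketch copies the without-SLOG write results and checks that MB/s ≈ IOPS × block size (reading MB as decimal megabytes) and that, with one outstanding I/O (Q=1, T=1), the mean latency ≈ 1/IOPS, which is Little's law at a queue depth of 1. The helper names are illustrative.

```python
# Consistency check for the "without SLOG" write results (Q=1, T=1).
# With a single outstanding I/O, throughput = IOPS x block size and the mean
# latency should be close to 1 / IOPS (Little's law with a queue depth of 1).
MIB = 1024 * 1024
KIB = 1024

# (sync setting, test, block bytes, MB/s, IOPS, latency in microseconds)
results_no_slog = [
    ("always", "SEQ 1MiB", MIB, 25.116, 24.0, 41634.48),
    ("always", "RND 4KiB", 4 * KIB, 0.368, 89.8, 10963.20),
    ("standard", "SEQ 1MiB", MIB, 275.173, 262.4, 3802.44),
    ("standard", "RND 4KiB", 4 * KIB, 0.141, 34.4, 28762.23),
    ("disabled", "SEQ 1MiB", MIB, 1649.127, 1572.7, 633.51),
    ("disabled", "RND 4KiB", 4 * KIB, 30.104, 7349.6, 134.88),
]


def expected_mb_per_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal MB/s implied by the IOPS figure."""
    return iops * block_bytes / 1_000_000


def expected_qd1_latency_us(iops: float) -> float:
    """Mean latency implied by the IOPS figure when only one I/O is in flight."""
    return 1_000_000 / iops


def relative_error(measured: float, expected: float) -> float:
    return abs(measured - expected) / expected


for sync, test, block, mbps, iops, lat_us in results_no_slog:
    mb_err = relative_error(mbps, expected_mb_per_s(iops, block))
    lat_err = relative_error(lat_us, expected_qd1_latency_us(iops))
    print(f"sync={sync:<8} {test}: MB/s off by {mb_err:.1%}, latency off by {lat_err:.1%}")
```

On these numbers every MB/s figure lands within about 0.2% of IOPS × block size and every latency within about 2% of 1/IOPS, so at this queue depth the three columns describe the same measurement from different angles.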

**With 100GB mirrored SLOG (two SSDs)**

Write tests, Q=1, T=1:

| sync | SEQ 1MiB MB/s | SEQ 1MiB IOPS | SEQ 1MiB latency (µs) | RND 4KiB MB/s | RND 4KiB IOPS | RND 4KiB latency (µs) |
|---|---|---|---|---|---|---|
| always | 279.417 | 266.5 | 3742.70 | 7.822 | 1909.7 | 521.35 |
| standard | 1710.350 | 1631.1 | 610.52 | 29.454 | 7190.9 | 137.90 |
| disabled | 1735.717 | 1655.3 | 602.00 | 30.380 | 7417.0 | 133.69 |
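To put the SLOG's effect into numbers, this Python sketch copies the MB/s figures from both result sets and computes the ratio with SLOG / without SLOG for each sync setting. It is only arithmetic on the reported single-run figures (Q=1, T=1 writes), not a benchmark in its own right; the names are illustrative.

```python
# Compare write throughput (MB/s) without and with the mirrored SLOG.
# Values are copied from the reported results; Q=1, T=1 write tests only.
throughput_mb_s = {
    # sync setting: {test: (without SLOG, with SLOG)}
    "always": {"SEQ 1MiB": (25.116, 279.417), "RND 4KiB": (0.368, 7.822)},
    "standard": {"SEQ 1MiB": (275.173, 1710.350), "RND 4KiB": (0.141, 29.454)},
    "disabled": {"SEQ 1MiB": (1649.127, 1735.717), "RND 4KiB": (30.104, 30.380)},
}


def slog_speedup(without: float, with_slog: float) -> float:
    """Ratio of throughput with the SLOG to throughput without it."""
    return with_slog / without


speedups = {
    (sync, test): slog_speedup(*pair)
    for sync, tests in throughput_mb_s.items()
    for test, pair in tests.items()
}

for (sync, test), ratio in speedups.items():
    print(f"sync={sync:<8} {test}: {ratio:7.2f}x with SLOG")
```

On these figures the SLOG gives roughly 11x (sequential) and 21x (random 4KiB) at sync=always and roughly 6x and 200x at sync=standard, but at most about 5% at sync=disabled, which fits the fact that a SLOG only absorbs synchronous writes.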

Yup, if you don’t have a SLOG, then leave “sync=disabled”.
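The advice above follows from how the ZFS `sync` property decides which writes must go through the ZFS intent log (ZIL), which is what a SLOG device holds. Below is a simplified Python model based on the property's documented values (`standard`, `always`, `disabled`); the enum and function names are hypothetical, and it ignores other tunables such as `logbias`. It shows why a SLOG does nothing with sync=disabled: no write waits on the intent log, so writes that were acknowledged but not yet committed in a transaction group can be lost on a crash or power failure.

```python
from enum import Enum


class SyncMode(Enum):
    """Values of the ZFS `sync` dataset property."""
    STANDARD = "standard"
    ALWAYS = "always"
    DISABLED = "disabled"


def must_hit_intent_log(mode: SyncMode, app_requested_sync: bool) -> bool:
    """Simplified model: must the write be logged to the ZIL (on the SLOG,
    if one exists) before ZFS acknowledges it to the caller?

    Writes that skip the intent log are held in memory and committed with
    the next transaction group. Other tunables such as `logbias` are ignored.
    """
    if mode is SyncMode.ALWAYS:
        return True  # every write is treated as synchronous
    if mode is SyncMode.DISABLED:
        return False  # sync requests are acknowledged without waiting
    return app_requested_sync  # standard: honour fsync/O_SYNC-style requests


for mode in SyncMode:
    for requested in (False, True):
        print(f"sync={mode.value:<8} app sync={requested!s:<5} -> "
              f"intent log: {must_hit_intent_log(mode, requested)}")
```

A SLOG can only speed up the writes for which this model returns `True`, which matches the benchmark: with sync=disabled the pool performs about the same with or without it.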