My XCP-NG has an SSD for boot plus two 4TB drives in Raid1.

The Raid is mounted as /data01

Under that is a folder Local_ISO that is mounted as a local repository.

I have now added NextCloud in another VM and would like to have a folder called NextCloud_data accessible from that VM so NextCloud can use the raid for storage.

What is the correct way of doing it? I tried to create a new storage, but got an error saying it was in use.

If I understand you correctly, why not just create a virtual disk? Attach the vdisk to your vm, and it’ll be available for Next Cloud.

As long as your virtual disk resides on the storage repository of “Local Storage”, then it should reside on your RAID 1 array (assuming Local Storage is configured for your RAID array).

The raid has been created and formatted from the command line of XCP-NG (on the host OS itself, not in a VM).

The entire raid has been mounted as /data01.

One folder has already been created, and is used for the ISO repository. So I do not need any more ISO repositories.

What I need is to use another folder on the same raid as data folder for my NextCloud.

So I want to make separate folders on a raid where separate VM’s can use a folder as data storage.

I am NOT talking about storing the VM’s on the raid. They will stay on the SSD.

Basically the same way as Word, Excel and Powerpoint are installed on the C drive of a Windows PC, but each of them has a folder on a raid where they store their data.

Can a folder on a raid/drive be mounted as a virtual disk that can be used as storage by a program that runs in a VM? No messing with the drive itself - formatting etc. Just mounting.

XCP-NG is not designed to work that way; it was not designed to offer up shared folders from the host OS. That is more of a NAS / file server function.

Ok? So it does not matter how many drives I put on the server - the VM’s will never be able to use them? How on earth do you provide storage for the programs running in the VM’s?

Yes, I know the modern way of doing it is to have a storage box somewhere that you connect to, but there must be some way to use the local storage?

I do not have the money to buy a Dell box with a couple of 40 core Xeon’s and 10 TB of RAM plus a cabinet of a petabyte of NVMe drives to use as storage.

I have a computer with an SSD to use for the OS and VM’s and a couple of HD’s to use for the data.

Sorry for my confusion…

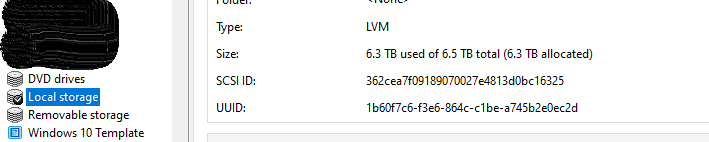

When you install XCP-NG, it should let you pick which drive to use for the OS, and which drive(s) to use for the VM storage. When selecting the drives for VM storage, you can generally pick multiple drives or if you’ve created a RAID1 array through a hardware controller, then it should show up as one volume. Either way, when you make your choice, after XCP-NG is installed, you should have a “Local Storage” storage repository, which will be all the drive(s) for VM storage added together. This should let you use as much of your local drives as you see fit.

For example, on one of my hosts, I have a 6.5TB RAID0 array (3 physical disks) configured in the Dell PERC which presents itself as one volume to XCP-NG. This is where ALL the VMs and attached virtual disks reside, which is “Local Storage”. There is also a single 300GB drive that is used for the OS. Nothing related to VMs lives on that volume; it strictly holds the XCP-NG data that makes the host run.

Well, my idea was to use the rest of the SSD as storage for the VM’s to make sure it is as fast as possible.

But the data (images, music,video) does not need that kind of speed. So I want to use the HD’s for that.

My logic is that every OS is a file server. You do not need NAS software to set up a raid and serve it up via Samba or NFS…

XCP-NG and most (if not all) true hypervisors don’t really have that as a concept. They’re meant to virtualize, not be a NAS.

What you could do is create a storage repository on the HDDs and create data VHDs on there for bulk data storage on a VM. Use that VM as a file server through SMB / NFS.
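As a rough sketch of what creating such a storage repository could look like from the host command line, here is a small Python helper that only assembles the `xe sr-create` arguments and prints them; it does not run anything. The device path `/dev/md0p2`, the label and the `<host-uuid>` placeholder are assumptions for illustration, not values from this thread. Keep in mind that creating an ext SR on a device reformats it, so it should point at an empty partition and not at the filesystem that is mounted as /data01.

```python
import shlex
from typing import List


def build_sr_create(host_uuid: str, device: str, name_label: str = "VM Storage") -> List[str]:
    """Assemble the argv for creating a local ext SR on a block device.

    All values passed in are placeholders chosen by the caller; nothing is executed.
    """
    return [
        "xe", "sr-create",
        f"host-uuid={host_uuid}",
        "content-type=user",
        "type=ext",
        "shared=false",
        f"name-label={name_label}",
        # WARNING: the device given here is wiped when the SR is created.
        f"device-config:device={device}",
    ]


if __name__ == "__main__":
    # Hypothetical values: replace with the real host UUID and an EMPTY partition.
    print(shlex.join(build_sr_create("<host-uuid>", "/dev/md0p2")))
```

The printed line can be reviewed and then pasted into a shell on the host once the placeholders are replaced.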


I will definitely try out that idea!

You said that you added the Raid1 from the command line. What command did you use? You absolutely can use local storage for VM’s; it just sounds like you might be going about it the wrong way.

Assuming you have Xen Orchestra installed…

If you go to home > hosts > your host > storage

Do you see your Raid1 device in use?

If you do, then you should be able to select it, go to Disks, + New Disk, make the disk, then go to your VM and attach the disk.
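For anyone who prefers the host command line over XO, the same create-and-attach steps can be sketched roughly as below. This is a minimal sketch that only builds the `xe` argument lists; the UUID placeholders, the disk name `nextcloud-data`, the 500 GiB size and the device position `1` are assumptions for illustration.

```python
import shlex
from typing import List


def build_vdi_create(sr_uuid: str, name_label: str, size_gib: int) -> List[str]:
    """argv for creating a virtual disk of size_gib GiB on the given SR."""
    size_bytes = size_gib * 1024 ** 3  # xe accepts the size in bytes
    return [
        "xe", "vdi-create",
        f"sr-uuid={sr_uuid}",
        f"name-label={name_label}",
        "type=user",
        f"virtual-size={size_bytes}",
    ]


def build_vbd_create(vm_uuid: str, vdi_uuid: str, device: str = "1") -> List[str]:
    """argv for connecting an existing virtual disk to a VM as a data disk."""
    return [
        "xe", "vbd-create",
        f"vm-uuid={vm_uuid}",
        f"vdi-uuid={vdi_uuid}",
        f"device={device}",  # assumed free position; the OS disk is usually 0
        "bootable=false",
        "mode=RW",
        "type=Disk",
    ]


def build_vbd_plug(vbd_uuid: str) -> List[str]:
    """argv for hot-plugging the disk into a running VM."""
    return ["xe", "vbd-plug", f"uuid={vbd_uuid}"]


if __name__ == "__main__":
    # Placeholders only: each step prints a UUID on the host that feeds the next step.
    for cmd in (
        build_vdi_create("<sr-uuid>", "nextcloud-data", 500),
        build_vbd_create("<vm-uuid>", "<vdi-uuid>"),
        build_vbd_plug("<vbd-uuid>"),
    ):
        print(shlex.join(cmd))
```

Inside the VM the new disk then shows up as an empty block device that still has to be partitioned, formatted and mounted before NextCloud can use it.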

If not, then you need to create a Storage Repository. If you manually partitioned, formatted and mounted the disk, then added a folder within it as an ISO store, then the same (ish) process might work to add it as ext(local), but maybe call it “VM Storage” rather than “Nextcloud…” as you will be using that for all VM’s that need a disk. Be aware though that this is not a “normal” way to do it (IMHO).

If you are able to, I would remove the ISO storage in XO, then unmount and remove the partitions, then make a new partition using only the size you need for ISO’s (100 GB maybe?) and another partition using the remainder of the disk. Format and mount the first partition and add it back via XO as ISO, then add the second partition (no formatting or mounting required) as an ext(local) SR via XO.

Remember to add the ISO storage to fstab. You will also need to reboot before the “proper” size shows in XO.

You could check out this video that I made which covers most of this. It’s the second time today I’ve posted this in an answer! perfect timing!!!
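As a small illustration of the fstab step, this sketch formats one fstab entry for the ISO partition. The device name `/dev/md0p1`, the ext4 filesystem and the mount options are assumptions; only the /data01 mount point comes from this thread.

```python
def fstab_line(device: str, mount_point: str, fs_type: str = "ext4",
               options: str = "defaults", dump: int = 0, passno: int = 2) -> str:
    """Return one tab-separated /etc/fstab entry for the given filesystem."""
    return "\t".join([device, mount_point, fs_type, options, str(dump), str(passno)])


if __name__ == "__main__":
    # Hypothetical device name; check the real one with lsblk or blkid first.
    print(fstab_line("/dev/md0p1", "/data01"))
```

The printed line is what would be appended to /etc/fstab on the host so the ISO partition is mounted again after a reboot.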


I used mdadm to create the raid, mounted it as data01 and it is added in fstab. I added the ISO folder that I created as a repository from XO.

The point now is that I do NOT want to store VM’s on this raid. Only data for the different programs that are running in the VM’s. It does not make any sense to store the VMs on a HD when I have more than enough space on the SSD… I might have to move the ISO storage to the SSD and make a small VM to share the HD via Samba.

You could do that but you are making it hard work for yourself.

You make the VM Operating system disk on the SSD backed SR (as you are doing)

You then make the VM Storage disk on the HDD backed SR

I run owncloud not nextcloud but in this (nearly) exact same setup. XCP-ng + OS disks are on SSD based storage, the data is in a separate disk on a separate SR backed by a raid5 array.

Putting the ISO’s on the SSD’s is also a total waste of fast disks for storage that is a/ read only and b/ rarely used.

True. But if I have to do that to free up the raid so I can use it for data storage, I will do that.

Let me clarify one thing. I really want to keep the VM’s on the SSD. I want to keep that speed advantage. So the VM with NextCloud stays there. But I want the data - pictures, videos - that I add to NextCloud to end up on the raid. I have a little Raspberry Pi 3 on my desk here that is set up exactly like that, but without the VM’s.

It is just installed as an iso to the SD-card, but the data is on an external USB drive.

I understand what you mean however I think (and please don’t take this the wrong way) that you are fundamentally misunderstanding how the storage works.

You don’t have to do that to free up the raid. You make 2 partitions, one for ISO’s and one for data, add the ISO one as you have previously, add the second as an ext(local) SR, then create the disk within that SR.

If you want the entire raid1 to be used by nextcloud then what you really want to do is have it set up as a passthrough disk. I have no experience of doing this myself but I understand it can be done.

If however you want several different machines to be able to use “bits” of the raid1 then you need to add it as an SR and create a virtual disk that you then attach to the VM.

Of course, I could resize the existing partition and create a new one. And yes, I have thought about using the whole raid or a whole partition as storage. A passthrough disk sounds interesting. I tried to research that some days ago, but could not find anything that made sense.

But if it is possible to just make a drive that can be attached to a VM, this would probably be the best.

Although - I tried to do something like that, and got a message that the drive was in use. So I am not sure what I did wrong.
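One possible explanation for the "in use" message, and this is an assumption rather than something confirmed in the thread, is that the array was still mounted at /data01 when the new storage was being created. A minimal sketch of how that could be checked from mount-table text such as the contents of /proc/mounts (the device name `/dev/md0` is hypothetical):

```python
from typing import List


def mount_points(device: str, mounts_text: str) -> List[str]:
    """Return every mount point listed for `device` in /proc/mounts style text."""
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # Each line is: device mount_point fs_type options dump pass
        if len(fields) >= 2 and fields[0] == device:
            points.append(fields[1])
    return points


if __name__ == "__main__":
    # Sample text for illustration; on the host, read the real table from /proc/mounts.
    sample = "/dev/md0 /data01 ext4 rw,relatime 0 0\n/dev/sda1 / ext3 rw 0 0\n"
    print(mount_points("/dev/md0", sample))
```

A non-empty result would mean the device is mounted on the host, which would fit with it being refused as a new storage repository.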

On a standalone hypervisor you generally have 2 pools:

- OS (RAID1 / Mirror)

- Data (RAID10/50/60 / ZFS equivalents)

In your case it sounds like you want the actual OSs of your VMs to reside on SSD with your OS, which is fine in XCP-NG, and have the Data live exclusively on the HDDs.

In this case, as I had mentioned, the easiest solution is to create a storage VM based on whatever you want.

It looks like you can do hard disk passthrough, but I believe the disks need to be unconfigured in XCP-NG, which would mean you’d want to remove your MDraid. Tom actually has a video on this using XoA https://youtu.be/vSDDMIG6Huk

From here, you could hypothetically attach those to a TrueNAS VM and use that for bulk storage as SMB or NFS shares.


that’s a good solution @gsrfan01.

Of course the “you don’t virtualise TrueNAS/pfSense” police won’t like it but it should be absolutely fine.

It’s not my favorite situation, but in a lab there’s worse configs. Passing through hardware to a VM is preferable IMO compared to people making single disk RAID0s on RAID cards to get past not having an HBA.

Even pfSense with a passed through physical NIC isn’t half bad. Being able to passthrough hardware mitigates a lot of the weird bugs people run into. Netgate even has official documentation for pfSense Plus in Azure, and loads of datacenter customers use pfSense virtualized for their infrastructure.

Running these roles as discrete devices is preferred but not always possible in a lab setting.