Hi all!

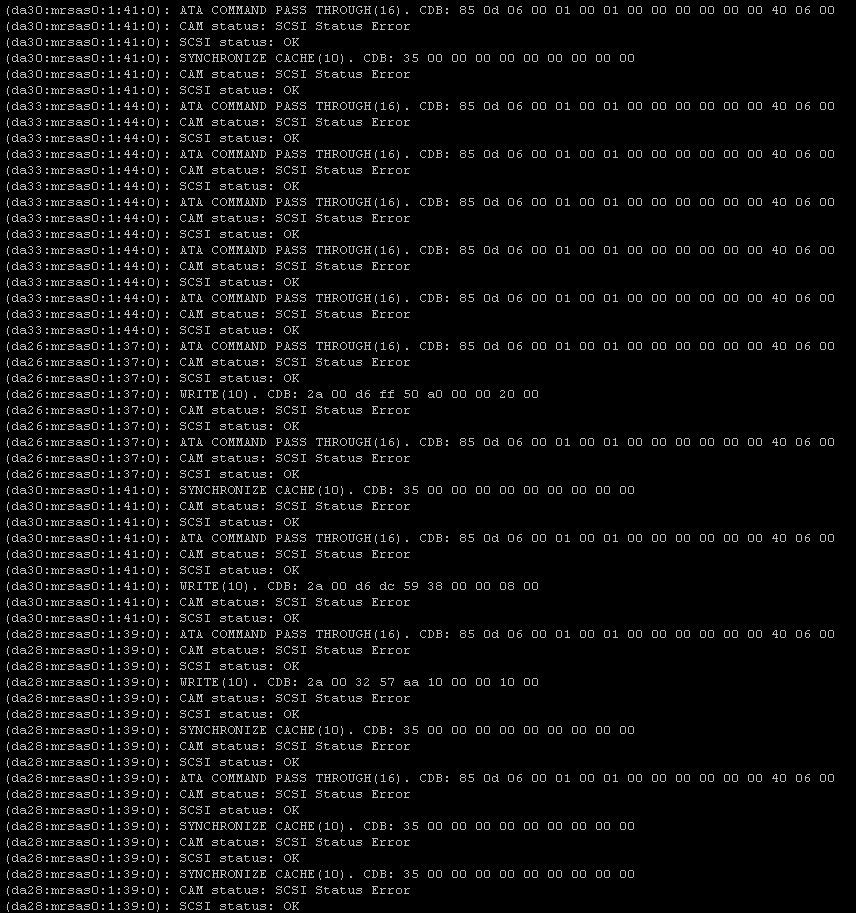

So I have a particular HDD that has dropped out of the pool for the second time. At first I suspected the problem wasn't the HDD itself, so I moved it to another bay. It dropped out again, and this is what I get in the log (newest entries first):

```
2021-02-24,16:29:12,Information,zenifer,daemon,1,"2021-02-24T16:29:12.880074+01:00 zenifer.local smartd 2235 - - Device: /dev/da2 [SAT], SMART Usage Attribute: 195 Hardware_ECC_Recovered changed from 5 to 6"
2021-02-24,16:29:12,Information,zenifer,daemon,1,"2021-02-24T16:29:12.880031+01:00 zenifer.local smartd 2235 - - Device: /dev/da2 [SAT], SMART Prefailure Attribute: 1 Raw_Read_Error_Rate changed from 76 to 77"
2021-02-24,16:29:12,Information,zenifer,daemon,1,"2021-02-24T16:29:12.746831+01:00 zenifer.local smartd 2235 - - Device: /dev/da2 [SAT], SMART Usage Attribute: 195 Hardware_ECC_Recovered changed from 5 to 6"
2021-02-24,16:29:12,Information,zenifer,daemon,1,"2021-02-24T16:29:12.746784+01:00 zenifer.local smartd 2235 - - Device: /dev/da2 [SAT], SMART Prefailure Attribute: 1 Raw_Read_Error_Rate changed from 76 to 77"
2021-02-24,16:28:01,Notice,zenifer,user,(da2,mps0:0:4:0): Invalidating pack
2021-02-24,16:28:01,Notice,zenifer,user,(da2,"mps0:0:4:0): Error 6, Retries exhausted"
2021-02-24,16:28:01,Notice,zenifer,user,(da2,"mps0:0:4:0): SCSI sense: UNIT ATTENTION asc:29,0 (Power on, reset, or bus device reset occurred)"
2021-02-24,16:28:01,Notice,zenifer,user,(da2,mps0:0:4:0): SCSI status: Check Condition
2021-02-24,16:28:01,Notice,zenifer,user,(da2,mps0:0:4:0): CAM status: SCSI Status Error
2021-02-24,16:28:01,Notice,zenifer,user,(da2,mps0:0:4:0): SYNCHRONIZE CACHE(10). CDB: 35 00 00 00 00 00 00 00 00 00
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.315566+00:00 zenifer.local zfsd 480 - - CaseFile::ActivateSpare: No spares available for pool Pool1
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.312660+00:00 zenifer.local zfsd 480 - - Evaluating existing case file
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.312656+00:00 zenifer.local zfsd 480 - - ZFS: Notify class=resource.fs.zfs.statechange eid=43 pool=Pool1 pool_context=0 pool_guid=1412680188277862934 pool_state=0 subsystem=ZFS time=00000016141804810000000312127240 timestamp=1614180481 type=resource.fs.zfs.statechange vdev_guid=8125723354094972555 vdev_laststate=7 vdev_path=/dev/gptid/9e4fe7cd-ecf7-11ea-9084-619fd2766c53 vdev_state=5 version=0
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.312614+00:00 zenifer.local zfsd 480 - - Evaluating existing case file
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.312596+00:00 zenifer.local zfsd 480 - - ZFS: Notify class=ereport.fs.zfs.probe_failure eid=42 ena=15100932523792807937 parent_guid=16567476538320923632 parent_type=raidz pool=Pool1 pool_context=0 pool_failmode=continue pool_guid=1412680188277862934 pool_state=0 prev_state=0 subsystem=ZFS time=00000016141804810000000312127240 timestamp=1614180481 type=ereport.fs.zfs.probe_failure vdev_ashift=9 vdev_cksum_errors=0 vdev_complete_ts=14401371494652 vdev_delays=0 vdev_delta_ts=7174 vdev_guid=8125723354094972555 vdev_path=/dev/gptid/9e4fe7cd-ecf7-11ea-9084-619fd2766c53 vdev_read_errors=3 vdev_spare_guids= vdev_type=disk vdev_write_errors=154
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166235+00:00 zenifer.local zfsd 480 - - Evaluating existing case file
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166232+00:00 zenifer.local zfsd 480 - - ZFS: Notify class=ereport.fs.zfs.probe_failure eid=41 ena=15100776839954565121 parent_guid=16567476538320923632 parent_type=raidz pool=Pool1 pool_context=0 pool_failmode=continue pool_guid=1412680188277862934 pool_state=0 prev_state=0 subsystem=ZFS time=00000016141804810000000163928654 timestamp=1614180481 type=ereport.fs.zfs.probe_failure vdev_ashift=9 vdev_cksum_errors=0 vdev_complete_ts=14401223009959 vdev_delays=0 vdev_delta_ts=59271 vdev_guid=8125723354094972555 vdev_path=/dev/gptid/9e4fe7cd-ecf7-11ea-9084-619fd2766c53 vdev_read_errors=3 vdev_spare_guids= vdev_type=disk vdev_write_errors=0
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166204+00:00 zenifer.local zfsd 480 - - Evaluating existing case file
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166200+00:00 zenifer.local zfsd 480 - - ZFS: Notify class=ereport.fs.zfs.io eid=40 ena=15100776820645038081 parent_guid=16567476538320923632 parent_type=raidz pool=Pool1 pool_context=0 pool_failmode=continue pool_guid=1412680188277862934 pool_state=0 subsystem=ZFS time=00000016141804810000000163928654 timestamp=1614180481 type=ereport.fs.zfs.io vdev_ashift=9 vdev_cksum_errors=0 vdev_complete_ts=14401223009959 vdev_delays=0 vdev_delta_ts=59271 vdev_guid=8125723354094972555 vdev_path=/dev/gptid/9e4fe7cd-ecf7-11ea-9084-619fd2766c53 vdev_read_errors=2 vdev_spare_guids= vdev_type=disk vdev_write_errors=0 zio_delay=58250 zio_delta=59231 zio_err=6 zio_flags=723137 zio_offset=9998683348992 zio_pipeline=17301504 zio_priority=0 zio_size=8192 zio_stage=16777216 zio_timestamp=14401222950688
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166167+00:00 zenifer.local zfsd 480 - - Evaluating existing case file
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166161+00:00 zenifer.local zfsd 480 - - ZFS: Notify class=ereport.fs.zfs.io eid=39 ena=15100776798994040833 parent_guid=16567476538320923632 parent_type=raidz pool=Pool1 pool_context=0 pool_failmode=continue pool_guid=1412680188277862934 pool_state=0 subsystem=ZFS time=00000016141804810000000163928654 timestamp=1614180481 type=ereport.fs.zfs.io vdev_ashift=9 vdev_cksum_errors=0 vdev_complete_ts=14401222990202 vdev_delays=0 vdev_delta_ts=13084 vdev_guid=8125723354094972555 vdev_path=/dev/gptid/9e4fe7cd-ecf7-11ea-9084-619fd2766c53 vdev_read_errors=1 vdev_spare_guids= vdev_type=disk vdev_write_errors=0 zio_delay=42159 zio_delta=43552 zio_err=6 zio_flags=723137 zio_offset=9998683086848 zio_pipeline=17301504 zio_priority=0 zio_size=8192 zio_stage=16777216 zio_timestamp=14401222945989
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.166013+00:00 zenifer.local zfsd 480 - - Vdev State = ONLINE
2021-02-24,16:28:01,Information,zenifer,daemon,1,"2021-02-24T15:28:01.165932+00:00 zenifer.local zfsd 480 - - CaseFile(1412680188277862934,8125723354094972555,)"
2021-02-24,16:28:01,Information,zenifer,daemon,1,2021-02-24T15:28:01.165904+00:00 zenifer.local zfsd 480 - - Creating new CaseFile:
2021-02-24,16:28:01,Notice,zenifer,user,(da2,"mps0:0:4:0): Retrying command, 0 more tries remain"
2021-02-24,16:28:01,Notice,zenifer,user,(da2,mps0:0:4:0): CAM status: Command timeout
2021-02-24,16:28:01,Notice,zenifer,user,(da2,mps0:0:4:0): SYNCHRONIZE CACHE(10). CDB: 35 00 00 00 00 00 00 00 00 00
2021-02-24,16:28:01,Notice,zenifer,user,mps0,Unfreezing devq for target ID 4
2021-02-24,16:28:01,Notice,zenifer,user,mps0,Finished abort recovery for target 4
2021-02-24,16:28:01,Notice,zenifer,user, (da2,mps0:0:4:0): SYNCHRONIZE CACHE(10). CDB: 35 00 00 00 00 00 00 00 00 00 length 0 SMID 1070 Aborting command 0xfffffe010dcdfdd0
2021-02-24,16:28:01,Notice,zenifer,user,mps0,Sending abort to target 4 for SMID 1070
2021-02-24,16:28:01,Notice,zenifer,user, (da2,"mps0:0:4:0): SYNCHRONIZE CACHE(10). CDB: 35 00 00 00 00 00 00 00 00 00 length 0 SMID 1070 Command timeout on target 4(0x000d) 60000 set, 60.49875710 elapsed"
```
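The zfsd `ZFS: Notify` lines pack the interesting counters into one long `key=value` string, which makes it hard to see the read counter on this vdev climb from 1 (eid 39) to 3 (eid 41) before the final probe failure (eid 42) reports 154 write errors. A minimal Python sketch for pulling those counters out, assuming every token after `ZFS: Notify` is a space-separated `key=value` pair as in this export (empty values such as `vdev_spare_guids=` allowed). The helpers `parse_zfs_notify` and `summarize` are hypothetical names, not part of zfsd or TrueNAS.

```python
from __future__ import annotations

import re

# Everything after "ZFS: Notify" is treated as space-separated key=value tokens.
NOTIFY_RE = re.compile(r"ZFS: Notify (.*)$")


def parse_zfs_notify(line: str) -> dict[str, str] | None:
    """Return the key=value fields of a zfsd notify line, or None otherwise.

    Assumes no value contains a space, which holds for the lines in this log.
    """
    match = NOTIFY_RE.search(line)
    if match is None:
        return None
    fields: dict[str, str] = {}
    for token in match.group(1).split():
        key, sep, value = token.partition("=")
        if sep:  # ignore stray tokens without '='
            fields[key] = value  # empty values (e.g. vdev_spare_guids=) stay ""
    return fields


def summarize(lines: list[str]) -> list[tuple[str, str, int, int]]:
    """Return (eid, class, read_errors, write_errors) for each ereport line."""
    rows = []
    for line in lines:
        fields = parse_zfs_notify(line)
        if fields is None or "vdev_read_errors" not in fields:
            continue  # not a notify line, or an event without error counters
        rows.append((
            fields.get("eid", "?"),
            fields.get("class", "?"),
            int(fields["vdev_read_errors"]),
            int(fields.get("vdev_write_errors", "0")),
        ))
    return rows


if __name__ == "__main__":
    # Shortened copies of lines from the log above (most fields omitted).
    sample = [
        "zfsd 480 - - ZFS: Notify class=ereport.fs.zfs.io eid=39 vdev_read_errors=1 vdev_write_errors=0",
        "zfsd 480 - - ZFS: Notify class=ereport.fs.zfs.probe_failure eid=42 vdev_read_errors=3 vdev_spare_guids= vdev_write_errors=154",
        "zfsd 480 - - ZFS: Notify class=resource.fs.zfs.statechange eid=43 vdev_state=5",
        "zfsd 480 - - Evaluating existing case file",
    ]
    for row in summarize(sample):
        print(row)
```

On the shortened sample this prints `('39', 'ereport.fs.zfs.io', 1, 0)` and `('42', 'ereport.fs.zfs.probe_failure', 3, 154)`; the statechange event and the plain "Evaluating existing case file" line are skipped because they carry no error counters.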

Because the error followed the same HDD into a different slot, I suspect the drive itself is at fault. Can I assume this is simply a case of a dying HDD?
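The reasoning above is the usual swap test: if the dropouts follow the disk from bay to bay, the disk is the prime suspect; if different disks drop out in the same bay, the bay, cable or backplane slot is. A minimal Python sketch of that heuristic, assuming each dropout is recorded as a hypothetical `(drive_serial, bay)` pair; the serials and bay names are made up for illustration. It only narrows the suspects down: a fault shared by both bays, such as the HBA (`mps0` in the log), is not ruled out by a swap test alone.

```python
from __future__ import annotations

from collections import defaultdict


def classify_dropouts(events: list[tuple[str, str]]) -> str:
    """Guess whether repeated dropouts follow a drive or a bay.

    events: one hypothetical (drive_serial, bay) pair per dropout.
    Returns "drive", "bay", "ambiguous" or "inconclusive".
    """
    bays_per_drive: dict[str, set[str]] = defaultdict(set)
    drives_per_bay: dict[str, set[str]] = defaultdict(set)
    for drive, bay in events:
        bays_per_drive[drive].add(bay)
        drives_per_bay[bay].add(drive)

    # The same drive dropped out in two or more different bays.
    drive_moved = any(len(bays) >= 2 for bays in bays_per_drive.values())
    # Two or more different drives dropped out in the same bay.
    bay_repeated = any(len(drives) >= 2 for drives in drives_per_bay.values())

    if drive_moved and bay_repeated:
        return "ambiguous"  # both patterns present
    if drive_moved:
        return "drive"
    if bay_repeated:
        return "bay"
    return "inconclusive"  # e.g. one dropout, or repeats in the same drive+bay


if __name__ == "__main__":
    # The situation described above, with made-up serial and bay names.
    print(classify_dropouts([("DISK-A", "bay-1"), ("DISK-A", "bay-2")]))  # drive
```

With only two dropouts of one disk in two bays, the sketch answers "drive", which matches the suspicion above but is evidence rather than proof.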

Thanks in advance!