I would start a new thread. If you are using the file I provided, the persistent data should just work.

Thanks for the great video tutorial!

Just wanted to share that I used the remote IP address in the rules (gl2_remote_ip). I found it easier to use than the confusing ID.

For example, if your pfsense (or any other syslog client) is sending with the source IP address 192.168.1.1, the rule is:

gl2_remote_ip must match exactly 192.168.1.1
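As a rough illustration of what that rule does, here is a minimal Python sketch of an exact-match check on a message field. The `matches_exactly` helper and the message dictionary are hypothetical and only mimic the rule's behavior; this is not Graylog's actual implementation.

```python
def matches_exactly(message: dict, field: str, expected: str) -> bool:
    """Return True only when the field exists and equals the expected value."""
    return message.get(field) == expected


# Hypothetical message as it might look after Graylog adds gl2_remote_ip
message = {"gl2_remote_ip": "192.168.1.1", "source": "pfsense"}

# The message is routed into the stream only when the sender IP matches
routed = matches_exactly(message, "gl2_remote_ip", "192.168.1.1")
```

Because the match is on the sender's address, each syslog client with a distinct IP can get its own rule without looking up an input ID.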

I ran into a similar problem with AVX support. I was using an Ubuntu VM on a QNAP NAS server.

By default, the QNAP Virtualization Station (a KVM-based hypervisor) presented the CPU as an Intel Core i7 (Westmere), which triggered the mongodb issue you described above.

Changing to Passthrough and hiding KVM HV Signature fixed the problem with mongodb.

So, if your server is not too old, you could run nested virtualization: create an Ubuntu VM and run the docker install there.
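To check whether a VM actually exposes AVX before starting the mongodb container, something like the following sketch may help. It assumes a Linux guest where CPU flags are listed in `/proc/cpuinfo`; the function names are made up for illustration.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set:
    """Collect the CPU feature flags from /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def has_avx(cpuinfo_text: str) -> bool:
    """True when the 'avx' flag is advertised to the guest."""
    return "avx" in cpu_flags(cpuinfo_text)


def host_has_avx(path: str = "/proc/cpuinfo") -> bool:
    """Assumption: running on Linux, where this file exists."""
    return has_avx(Path(path).read_text())
```

Calling `host_has_avx()` inside the VM before and after switching the CPU model to passthrough should show whether the flag becomes visible to the guest.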

Very good tutorial. I used parts of it to stand up Graylog over the weekend. I started with the sample yaml from Graylog’s site and then tweaked it with features from your copy. For creating the regular expressions for extractors, I am looking for a good tool to test out patterns. I used to have one years ago, but that is long lost. Any suggestions?

You can give ChatGPT a sample from a log file and ask it to create an extractor specifically for Graylog.

The prompt I used was:

Create a graylog grok extractor to parse suricata data from pfsense using this example message:

and then I would paste in a sample message and it would spit out the grok pattern (or regex).

Thanks Tom, it seems like cheating, but hey, if it works I will give it a try. Once I got your pfsense log extractors working, I decided to bring in another system that doesn’t have an extractor library on the Graylog forums.

I did a trial with this:

Create a graylog grok extractor to parse suricata data from pfsense using this example message: filterlog[84373]: 6,1000000105,lagg0.15,match,block,in,6,0x00,0x9e34e,255,UDP,17,53,fe80::aa65:9ba7:16a0:2c36,ff02::fb,5353,5353,53

The response was this. It looks like I can just copy and paste the solution.

Here is a grok pattern that you can use to parse the given suricata data from pfsense:

%{WORD:filterlog}\[%{INT:filterlog_id}\]: %{INT:rule},%{INT:sub_rule},%{DATA:interface},%{WORD:reason},%{WORD:action},%{WORD:direction},%{INT:ip_version},0x%{BASE16NUM:tos},0x%{BASE16NUM:ecn},%{INT:ttl},%{WORD:protocol},%{INT:protocol_id},%{INT:length},%{IPV6:src_ip},%{IPV6:dst_ip},%{INT:src_port},%{INT:dst_port},%{INT:data_length}
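One way to sanity-check a generated pattern before pasting it into Graylog is to translate it into a plain regex and run it against the sample message. The sketch below is a hand-written Python approximation of the grok pattern above, not grok itself: the field names are copied from the ChatGPT answer and may not reflect the real meaning of each filterlog column, and the IPv6 groups are simplified to "hex digits and colons". Note that the literal brackets around the process ID are escaped.

```python
import re
from typing import Optional

SAMPLE = (
    "filterlog[84373]: 6,1000000105,lagg0.15,match,block,in,6,0x00,0x9e34e,"
    "255,UDP,17,53,fe80::aa65:9ba7:16a0:2c36,ff02::fb,5353,5353,53"
)

FILTERLOG_RE = re.compile(
    r"(?P<filterlog>\w+)\[(?P<filterlog_id>\d+)\]: "
    r"(?P<rule>\d+),(?P<sub_rule>\d+),(?P<interface>[^,]+),"
    r"(?P<reason>\w+),(?P<action>\w+),(?P<direction>\w+),"
    r"(?P<ip_version>\d+),0x(?P<tos>[0-9A-Fa-f]+),0x(?P<ecn>[0-9A-Fa-f]+),"
    r"(?P<ttl>\d+),(?P<protocol>\w+),(?P<protocol_id>\d+),(?P<length>\d+),"
    r"(?P<src_ip>[0-9A-Fa-f:]+),(?P<dst_ip>[0-9A-Fa-f:]+),"
    r"(?P<src_port>\d+),(?P<dst_port>\d+),(?P<data_length>\d+)"
)


def parse_filterlog(line: str) -> Optional[dict]:
    """Return the named fields, or None when the whole line does not match."""
    match = FILTERLOG_RE.fullmatch(line)
    return match.groupdict() if match else None


fields = parse_filterlog(SAMPLE)
```

If `parse_filterlog` returns `None` for a real log line, the pattern probably needs another variant, for example for IPv4 or TCP messages, which carry a different set of columns.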

RegExRX on MacOS is pretty good.

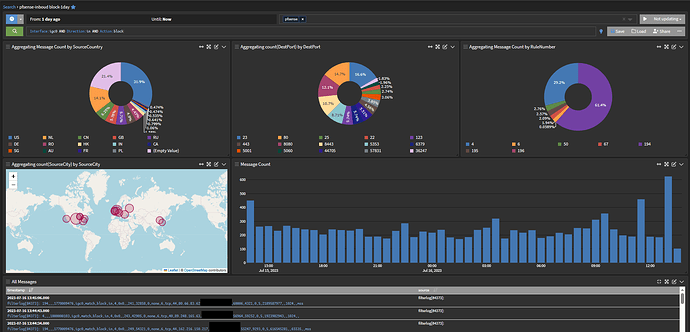

I was able to get the extractors running for both IPv6 and IPv4, and then created Geo lookup tables based on the Graylog blog:

I have a new search dashboard. All I have left is to work out how to translate the pfsense rules from their numeric IDs to their actual names:

Thanks for this guide.

I had no success getting the messages logged into Graylog until I switched pfsense’s Log Message Format to syslog (RFC 5424, with RFC 3339 microsecond-precision timestamps).

After that, it just worked.
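For anyone wondering what that format looks like on the wire, here is a small Python sketch that builds an RFC 5424 line with an RFC 3339 microsecond timestamp. The hostname, application name, and message text are placeholders, and structured data is left empty (`-`); it only illustrates the header layout, it is not what pfsense itself runs.

```python
from datetime import datetime, timezone


def rfc5424_line(facility: int, severity: int, when: datetime,
                 hostname: str, app: str, procid: str, msg: str) -> str:
    """Build '<PRI>1 TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG'."""
    pri = facility * 8 + severity                     # priority value
    timestamp = when.isoformat(timespec="microseconds")
    # MSGID and STRUCTURED-DATA are both the nil value "-" in this sketch
    return f"<{pri}>1 {timestamp} {hostname} {app} {procid} - - {msg}"


# Placeholder values: facility 16 (local0), severity 6 (informational)
example = rfc5424_line(
    16, 6,
    datetime(2023, 6, 1, 12, 0, 0, 123, tzinfo=timezone.utc),
    "pfsense", "filterlog", "84373", "example message",
)
```

The version digit after the priority and the full timestamp with a UTC offset are the main differences from the older BSD-style syslog format.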

@LTS_Tom How did you implement the last part with the openvpn login traps?

Is there a more detailed explanation?

I am trying to get the alert and event definitions working.

Thank you in advance

For the event definition:

Filter & Aggregation

Type: Filter

Search Query: "Peer Connection Initiated with"

Streams: LTS_Pfsense

Search within: 1 minutes

Execute search every: 1 minutes

Enable scheduling: yes

Event limit: 0

For the notification:

${if backlog}

${foreach backlog message}

OpenVPN User: ${message.fields.client_username} Logged In via : ${message.fields.client_ip} at ${event.timestamp}

${end}

${end}

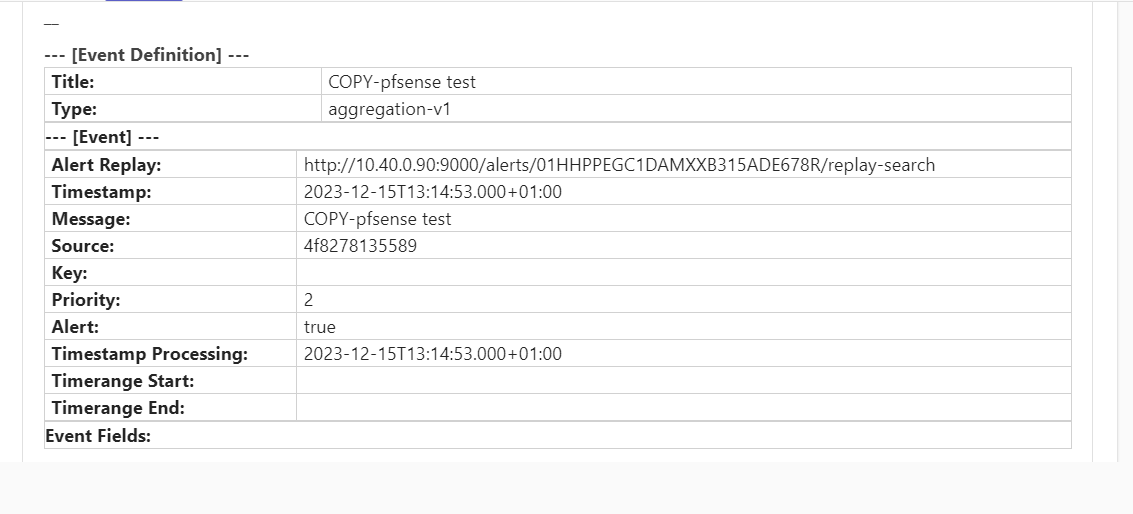

Hi, it does not give the result that I would expect.

In my case I’m sending to Teams:

<b>--- [Event Definition] ---</b>

<table>

<tr><td><b>Title:</b></td><td>${event_definition_title}</td></tr>

<tr><td><b>Type:</b></td><td>${event_definition_type}</td></tr>

</table>

<b>--- [Event] ---</b>

<table>

<tr><td><b>Alert Replay:</b></td><td>${http_external_uri}alerts/${event.id}/replay-search</td></tr>

<tr><td><b>Timestamp:</b></td><td>${event.timestamp}</td></tr>

<tr><td><b>Message:</b></td><td>${event.message}</td></tr>

<tr><td><b>Source:</b></td><td>${event.source}</td></tr>

<tr><td><b>Key:</b></td><td>${event.key}</td></tr>

<tr><td><b>Priority:</b></td><td>${event.priority}</td></tr>

<tr><td><b>Alert:</b></td><td>${event.alert}</td></tr>

<tr><td><b>Timestamp Processing:</b></td><td>${event.timestamp}</td></tr>

<tr><td><b>Timerange Start:</b></td><td>${event.timerange_start}</td></tr>

<tr><td><b>Timerange End:</b></td><td>${event.timerange_end}</td></tr>

</table>

<b>Event Fields:</b>

<table>

${foreach event.fields field}

<tr><td><b>${field.key}:</b></td><td>${field.value}</td></tr>

${end}

</table>

${if backlog}

<b>--- [Backlog] ---</b>

${foreach backlog message}

<p><code>OpenVPN User: ${message.fields.client_username} Logged In via : ${message.fields.client_ip} at ${event.timestamp}</code></p>

${end}${end}

What am I doing wrong?

The result in Teams is like:

Not sure, I have not tested it with Teams. Are you sure the alert had an OpenVPN login to pull the data from?

You need to set the backlog to 1 in order to display the log data
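To see why the backlog setting matters, here is a rough Python imitation of the `${if backlog}` / `${foreach backlog message}` part of the template. This is not Graylog's template engine, and the message dictionaries are made up, but the logic is the same: with an empty backlog the whole block is skipped and nothing about the OpenVPN user is printed.

```python
def render_backlog(backlog: list, event_timestamp: str) -> str:
    """Mimic the backlog section of the notification template."""
    if not backlog:                  # ${if backlog} is false when empty
        return ""
    lines = []
    for message in backlog:          # ${foreach backlog message}
        fields = message.get("fields", {})
        lines.append(
            f"OpenVPN User: {fields.get('client_username', '')} "
            f"Logged In via : {fields.get('client_ip', '')} "
            f"at {event_timestamp}"
        )
    return "\n".join(lines)


# Hypothetical backlog with one message attached to the event
with_backlog = render_backlog(
    [{"fields": {"client_username": "alice", "client_ip": "203.0.113.5"}}],
    "2023-06-01T12:00:00Z",
)
without_backlog = render_backlog([], "2023-06-01T12:00:00Z")
```

With a backlog of 0, the event carries no messages, so `client_username` and `client_ip` have nothing to be read from.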

Let me try to find that setting, thanks.

Can anyone help me with this error?

Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error setting rlimits for ready process: error setting rlimit type 8: operation not permitted: unknown

This is my docker-compose.yml:

version: '3'

networks:
  graynet:
    driver: bridge

# This is how you persist data between container restarts
volumes:
  mongo_data:
    driver: local
  log_data:
    driver: local
  graylog_data:
    driver: local

services:
  # Graylog stores configuration in MongoDB
  mongo:
    image: mongo:6.0.5-jammy
    container_name: mongodb
    volumes:
      - "mongo_data:/data/db"
    networks:
      - graynet
    restart: unless-stopped

  # The logs themselves are stored in Opensearch
  opensearch:
    image: opensearchproject/opensearch:2
    container_name: opensearch
    environment:
      - "OPENSEARCH_JAVA_OPTS=-Xms1g -Xmx1g"
      - "bootstrap.memory_lock=true"
      - "discovery.type=single-node"
      - "action.auto_create_index=false"
      - "plugins.security.ssl.http.enabled=false"
      - "plugins.security.disabled=true"
    volumes:
      - "log_data:/usr/share/opensearch/data"
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    ports:
      - 9200:9200/tcp
    networks:
      - graynet
    restart: unless-stopped

  graylog:
    image: graylog/graylog:5.1
    container_name: graylog
    environment:
      # CHANGE ME (must be at least 16 characters)!
      GRAYLOG_PASSWORD_SECRET: "...."
      # Password: admin
      GRAYLOG_ROOT_PASSWORD_SHA2: "..."
      GRAYLOG_HTTP_BIND_ADDRESS: "0.0.0.0:9000"
      GRAYLOG_HTTP_EXTERNAL_URI: "http://localhost:9000/"
      GRAYLOG_ELASTICSEARCH_HOSTS: "http://opensearch:9200"
      GRAYLOG_MONGODB_URI: "mongodb://mongodb:27017/graylog"
      GRAYLOG_TIMEZONE: "Europe/London"
      TZ: "Europe/London"
      GRAYLOG_TRANSPORT_EMAIL_PROTOCOL: "smtp"
      GRAYLOG_TRANSPORT_EMAIL_WEB_INTERFACE_URL: "http://192.168.20.13:9000/"
      GRAYLOG_TRANSPORT_EMAIL_HOSTNAME: "outbound.mailhop.org"
      GRAYLOG_TRANSPORT_EMAIL_ENABLED: "true"
      GRAYLOG_TRANSPORT_EMAIL_PORT: "587"
      GRAYLOG_TRANSPORT_EMAIL_USE_AUTH: "true"
      GRAYLOG_TRANSPORT_EMAIL_AUTH_USERNAME: "xxxxx"
      GRAYLOG_TRANSPORT_EMAIL_AUTH_PASSWORD: "xxxxx"
      GRAYLOG_TRANSPORT_EMAIL_USE_TLS: "true"
      GRAYLOG_TRANSPORT_EMAIL_USE_SSL: "false"
      GRAYLOG_TRANSPORT_FROM_EMAIL: "graylog@example.com"
      GRAYLOG_TRANSPORT_SUBJECT_PREFIX: "[graylog]"
    entrypoint: /usr/bin/tini -- wait-for-it opensearch:9200 -- /docker-entrypoint.sh
    volumes:
      - "${PWD}/config/graylog/graylog.conf:/usr/share/graylog/config/graylog.conf"
      - "graylog_data:/usr/share/graylog/data"
    networks:
      - graynet
    restart: always
    depends_on:
      opensearch:
        condition: "service_started"
      mongo:
        condition: "service_started"
    ports:
      - 9000:9000/tcp # Graylog web interface and REST API
      - 1514:1514/tcp # Syslog
      - 1514:1514/udp # Syslog
      - 12201:12201/tcp # GELF
      - 12201:12201/udp # GELF

Are you trying to run docker in an LXC container?

Yes I am, is it possible to do that or must it be a VM? I have some other services running on docker in LXC containers.

It's not recommended to run docker in LXC containers. I have run into so many issues with it, and from the articles I have read, docker on LXC might work for some containers but is not good for production. Personally I would go with a full VM for docker.
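For context on the error itself: on Linux, rlimit type 8 is `RLIMIT_MEMLOCK`, which lines up with the `memlock` ulimits on the opensearch service in the compose file above. A likely explanation, stated as an assumption, is that the LXC container is not allowed to raise its hard limit to unlimited. The sketch below uses Python's standard `resource` module to show the general rule; the helper names are made up for illustration.

```python
import resource


def can_set_without_privilege(requested_hard: int, current_hard: int) -> bool:
    """An unprivileged process may keep or lower its hard limit, not raise it."""
    if current_hard == resource.RLIM_INFINITY:
        return True                  # already unlimited, anything is allowed
    if requested_hard == resource.RLIM_INFINITY:
        return False                 # going to unlimited would be a raise
    return requested_hard <= current_hard


def memlock_report() -> str:
    """Show the current memlock limits and whether 'unlimited' is reachable."""
    soft, hard = resource.getrlimit(resource.RLIMIT_MEMLOCK)
    allowed = can_set_without_privilege(resource.RLIM_INFINITY, hard)
    return f"memlock soft={soft} hard={hard} unlimited_allowed={allowed}"
```

Running `memlock_report()` inside the LXC container and inside a VM should make the difference visible: if the hard limit is finite and the process lacks the privilege to raise it, a request for `-1` (unlimited) is refused.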