Hi there!

I’ve recently finished a new hardware build. Everything works fine except TrueNAS, which crashes after running for a few days.

I’m running my boot-pool off a single NVMe drive (Transcend 128GB NVMe PCIe Gen3 x4 MTE110S M.2 SSD, TS128GMTE110S, if that matters).

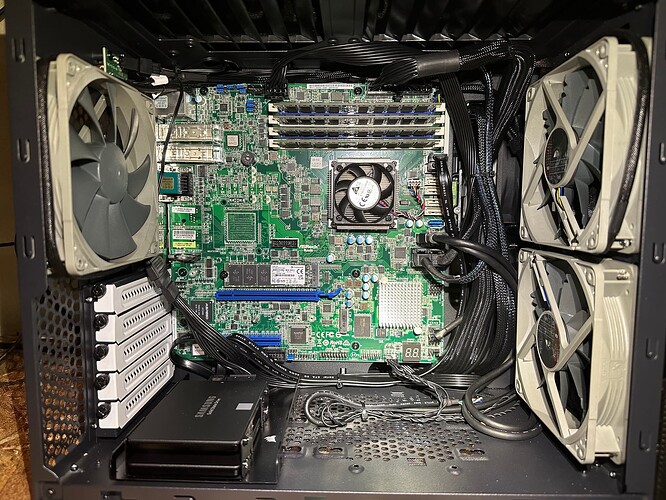

HW build details:

- Fractal Design Node 804 Black Window Aluminum/Steel Micro ATX Cube Computer Case (×1)

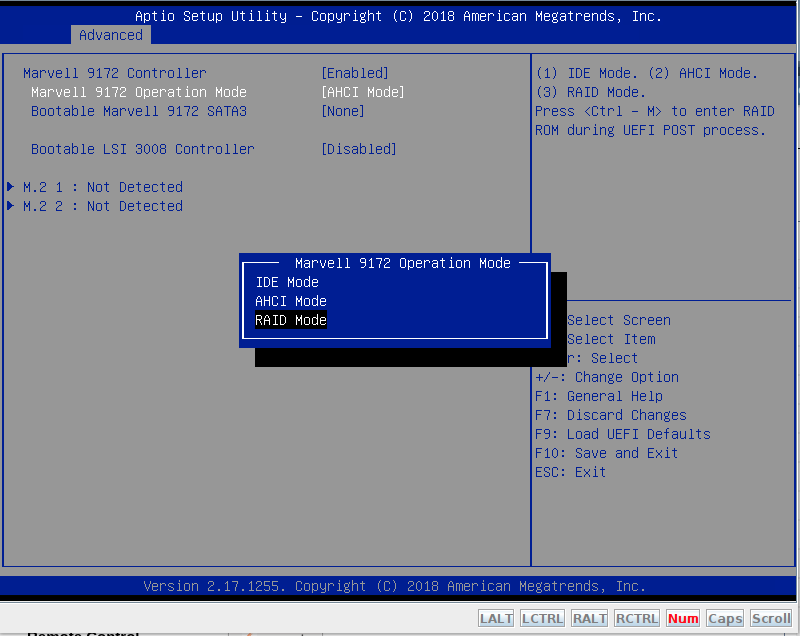

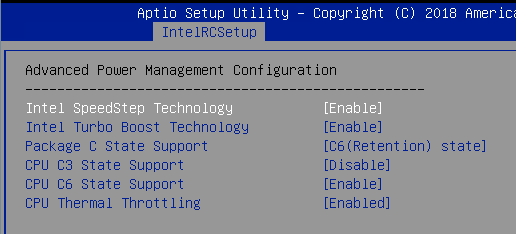

- ASRock Rack D1541D4U-2O8R Server Motherboard, Intel Xeon D-1541, SFP, DDR4 ECC DIMM (×1)

- WD Red Plus 12TB NAS Hard Disk Drive - 7200 RPM Class SATA 6Gb/s, CMR, 256MB Cache, 3.5 Inch - WD120EFBX (×8)

- CORSAIR RMe Series RM750e 80 PLUS Gold Fully Modular Low-Noise ATX 3.0 and PCIe 5.0 Power Supply - Black (×1)

- Corsair Dual SSD Mounting Bracket (3.5" Internal Drive Bay to 2.5", Easy Installation) Black (×1)

- Corsair CP-8920186 Premium Individually Sleeved SATA Cable, Black PSUs 29.5 inches (×1)

- SAMSUNG Electronics 870 EVO 2TB 2.5 Inch SATA III Internal SSD (MZ-77E2T0B/AM) (×2)

- Noctua NF-A14 iPPC-2000 PWM, Heavy Duty Cooling Fan, 4-Pin, 2000 RPM (140mm, Black) (×1)

- Transcend 128GB NVMe PCIe Gen3 x4 MTE110S M.2 SSD Solid State Drive TS128GMTE110S (×1)

- Noctua NF-P12 redux-1700 PWM, High Performance Cooling Fan, 4-Pin, 1700 RPM (120mm, Grey) (×3)

When a crash occurs, I see the following in the syslog:

2023-08-28T16:34:14.000-07:00 truenas zed[2303824]: eid=220 class=io_failure pool='boot-pool'

2023-08-28T16:34:14.000-07:00 truenas zed[2303822]: eid=219 class=io_failure pool='boot-pool'

2023-08-28T16:34:14.000-07:00 truenas kernel: WARNING: Pool 'boot-pool' has encountered an uncorrectable I/O failure and has been suspended.

2023-08-28T16:34:14.000-07:00 truenas zed[2303820]: eid=218 class=data pool='boot-pool' priority=0 err=6 flags=0x8881 bookmark=158:59988:0:0

2023-08-28T16:34:14.000-07:00 truenas zed[2303817]: eid=217 class=data pool='boot-pool' priority=0 err=6 flags=0x808881 bookmark=158:54802:0:0

2023-08-28T16:34:14.000-07:00 truenas kernel: WARNING: Pool 'boot-pool' has encountered an uncorrectable I/O failure and has been suspended.

2023-08-28T16:34:14.000-07:00 truenas kernel: zio pool=boot-pool vdev=/dev/nvme0n1p3 error=5 type=1 offset=11820916736 size=12288 flags=180880

2023-08-28T16:34:14.000-07:00 truenas kernel: nvme0n1: detected capacity change from 250069680 to 0

2023-08-28T16:34:14.000-07:00 truenas kernel: nvme nvme0: Removing after probe failure status: -19

2023-08-28T16:34:14.000-07:00 truenas kernel: nvme 0000:08:00.0: can't change power state from D3cold to D0 (config space inaccessible)

2023-08-28T16:34:14.000-07:00 truenas kernel: nvme nvme0: controller is down; will reset: CSTS=0xffffffff, PCI_STATUS=0xffff

Is this just a sign of a buggy NVMe drive, or is there something else at play?

I have two NVMe slots on the motherboard: one holds the boot device, and the other currently houses an Intel Optane drive used as the LOG vdev for the spinning-rust pool (it isn't shown in the pictures below; I haven't had a chance to take updated ones yet):