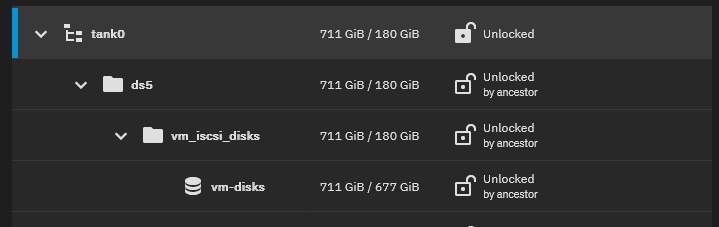

I am trying to delete a pool that contains two datasets and a zvol, which I was using as iSCSI storage for several virtual machines, but I receive the error below when I try to delete it.

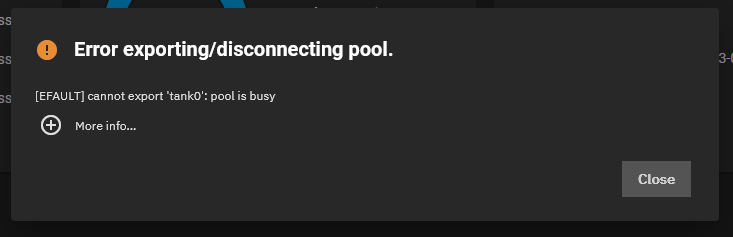

Error: concurrent.futures.process._RemoteTraceback:
"""
Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs.py", line 210, in export
    zfs.export_pool(pool)
  File "libzfs.pyx", line 465, in libzfs.ZFS.__exit__
  File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs.py", line 210, in export
    zfs.export_pool(pool)
  File "libzfs.pyx", line 1340, in libzfs.ZFS.export_pool
libzfs.ZFSException: cannot export 'tank0': pool is busy

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3.9/concurrent/futures/process.py", line 243, in _process_worker
    r = call_item.fn(*call_item.args, **call_item.kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 115, in main_worker
    res = MIDDLEWARE._run(*call_args)
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 46, in _run
    return self._call(name, serviceobj, methodobj, args, job=job)
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call
    return methodobj(*params)
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call
    return methodobj(*params)
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1322, in nf
    return func(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs.py", line 212, in export
    raise CallError(str(e))
middlewared.service_exception.CallError: [EFAULT] cannot export 'tank0': pool is busy
"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/job.py", line 426, in run
    await self.future
  File "/usr/lib/python3/dist-packages/middlewared/job.py", line 461, in __run_body
    rv = await self.method(*([self] + args))
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1318, in nf
    return await func(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1186, in nf
    res = await f(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/plugins/pool.py", line 1668, in export
    await self.middleware.call('zfs.pool.export', pool['name'])
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1386, in call
    return await self._call(
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1343, in _call
    return await self._call_worker(name, *prepared_call.args)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1349, in _call_worker
    return await self.run_in_proc(main_worker, name, args, job)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1264, in run_in_proc
    return await self.run_in_executor(self.__procpool, method, *args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1249, in run_in_executor
    return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
middlewared.service_exception.CallError: [EFAULT] cannot export 'tank0': pool is busy

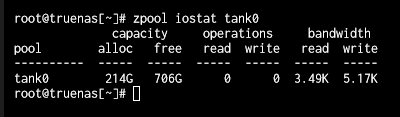

I can’t think of anything using the pool, the datasets, or the zvol, but I know there is activity when I check it with zpool iostat tank0. I even shut down my virtualization server in case there was still some stray connection back to TrueNAS.

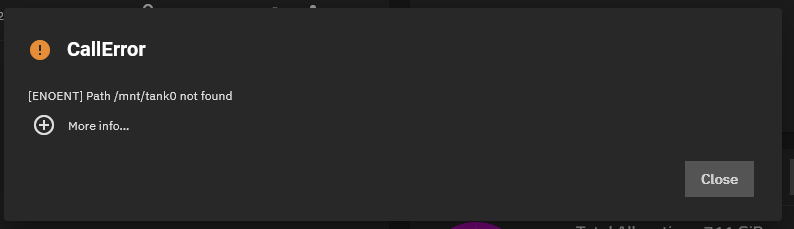

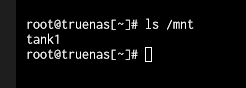

Also, when I go to the dataset page and look at pool tank0 it tells me the path can’t be found. I confirmed this in the shell as well.

Things I have tried:

- Restarting TrueNAS

- Made sure iSCSI service is turned off

- Removed all configuration from iSCSI except the Target Global Configuration (which I believe is required)

- Made sure tank0 is not used as a system pool

- Deleting the pool from the shell with the force flag (received the same error)
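
The shell-side checks mentioned in this post can be sketched as a small script. Only the pool name tank0 and the use of zpool iostat come from the post itself; the other commands are standard OpenZFS commands assumed here for illustration, not a record of exactly what was run. The `run` helper is a hypothetical convenience that prints each command before running it. The forced export is left commented out because it is the step that fails with "pool is busy".

```shell
#!/bin/sh
# Pool name taken from the post; override with POOL=... if needed.
POOL="${POOL:-tank0}"

# Hypothetical helper: print the command, run it, and report a
# non-zero exit status instead of aborting the script.
run() {
    echo "+ $*"
    "$@" || echo "  (exit status $?)"
}

if command -v zpool >/dev/null 2>&1; then
    # Overall pool health and device layout.
    run zpool status "$POOL"
    # Three I/O samples, five seconds apart, to see whether the pool is active.
    run zpool iostat "$POOL" 5 3
    # Every dataset, zvol, and snapshot that still exists in the pool.
    run zfs list -r -t all "$POOL"
    # Whether each dataset is mounted and where it expects to be mounted.
    run zfs get -r mounted,mountpoint "$POOL"
    # The forced export that returns "pool is busy" (assumed form of the command):
    # run zpool export -f "$POOL"
else
    echo "zpool not found; run this on the TrueNAS host"
fi
```

Comparing the `mounted` and `mountpoint` values with what the dataset page reports may show whether the "path can’t be found" message and the busy pool are related.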

Is there anything I’m overlooking? Or something else I need to try?

Thank you!