Sure! (Also, @FredFerrell, thank you so much for your continued efforts on this!)

First, host to FreeNAS. (I could only install iperf on the XenServer host, not iperf3.)

root@freenas[~]# iperf -s

Server listening on TCP port 5001

TCP window size: 256 KByte (default)

[ 4] local 193.0.0.1 port 5001 connected with 193.0.0.101 port 37824

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 10.2 GBytes 8.77 Gbits/sec

[15:30 xcp-Nebula ~]# iperf -c 193.0.0.1

Client connecting to 193.0.0.1, TCP port 5001

TCP window size: 724 KByte (default)

[ 3] local 193.0.0.101 port 37824 connected with 193.0.0.1 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 10.2 GBytes 8.79 Gbits/sec

[16:13 xcp-Nebula ~]#

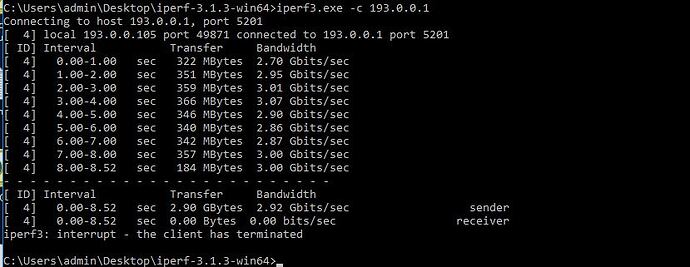

Now from the Windows VM, using the exact same NIC as above:

root@freenas[~]# iperf3 -s

Server listening on 5201

Accepted connection from 193.0.0.105, port 49870

[ 5] local 193.0.0.1 port 5201 connected to 193.0.0.105 port 49871

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 321 MBytes 2.69 Gbits/sec

[ 5] 1.00-2.00 sec 351 MBytes 2.95 Gbits/sec

[ 5] 2.00-3.00 sec 359 MBytes 3.01 Gbits/sec

[ 5] 3.00-4.00 sec 366 MBytes 3.07 Gbits/sec

[ 5] 4.00-5.00 sec 345 MBytes 2.90 Gbits/sec

[ 5] 5.00-6.00 sec 340 MBytes 2.86 Gbits/sec

[ 5] 6.00-7.00 sec 342 MBytes 2.87 Gbits/sec

[ 5] 7.00-8.00 sec 357 MBytes 3.00 Gbits/sec

[ ID] Interval Transfer Bitrate

[ 5] 0.00-8.00 sec 2.90 GBytes 3.11 Gbits/sec receiver

iperf3: the client has terminated
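To put the two summary lines side by side, here is a minimal Python sketch that turns each transfer total back into a bitrate. It assumes iperf's usual unit convention: transfer sizes use binary prefixes (1 GByte = 2^30 bytes) while bitrates use decimal prefixes (1 Gbit/s = 10^9 bit/s). The per-second rows above are consistent with that (321 MBytes in 1 s comes out to 2.69 Gbit/s). The helper name `gbits_per_sec` is just for illustration.

```python
# Sketch: recompute iperf's reported bitrate from transfer size and duration.
# Assumption: iperf/iperf3 print transfer with binary prefixes (1 GByte = 2**30 bytes)
# and bitrate with decimal prefixes (1 Gbit/s = 10**9 bit/s).

GIB = 2**30    # bytes per "GByte" in iperf's Transfer column
GBIT = 10**9   # bits per "Gbit" in iperf's Bandwidth/Bitrate column


def gbits_per_sec(transfer_gbytes: float, seconds: float) -> float:
    """Hypothetical helper: convert an iperf transfer total (GBytes) over a duration to Gbit/s."""
    return transfer_gbytes * GIB * 8 / seconds / GBIT


# Summary figures copied from the two runs above.
host_rate = gbits_per_sec(10.2, 10.0)  # XCP-ng host -> FreeNAS (iperf)
vm_rate = gbits_per_sec(2.90, 8.0)     # Windows VM -> FreeNAS (iperf3)

print(f"host: {host_rate:.2f} Gbit/s")
print(f"VM:   {vm_rate:.2f} Gbit/s")
print(f"VM/host ratio: {vm_rate / host_rate:.0%}")
```

This prints about 8.76 Gbit/s for the host (iperf shows 8.77/8.79 because the 10.2 GBytes figure is itself rounded), 3.11 Gbit/s for the VM, and a ratio of roughly 36%, so the VM gets a bit over a third of what the host gets over the same NIC.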