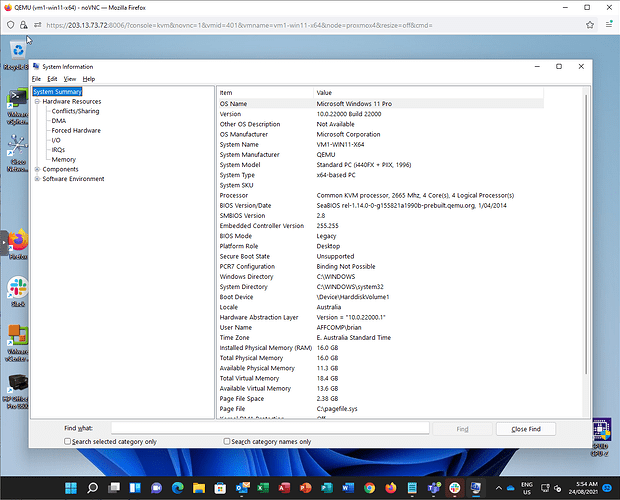

I am already running Windows 11 VMs on Proxmox with Xeon X5570 CPUs.

NOT using any hacks or leaked ISOs. I just upgraded via Windows Insider.

What version of TPM (if any) is your host running?

Also, you said upgraded: does that mean you had Win 10 running on there before? An upgrade might look at the base OS and keep the pieces it needs to stay running, unlike the clean installs that testers have said need TPM 2.0 or higher. I’m just going on what I’ve read, because I don’t have time to mess with it right now.

There is no TPM. My current understanding is that TPM is NOT required when running as a VM.

Yes, these were Win 10 VMs that were upgraded to Win 11.

@Penfold that’s interesting.

I just noticed that in my VMware Workstation 16 a TPM can be added to a virtual machine. The host is a 6th-gen Intel, so in principle Win 11 won’t install on the host, but it might in a VM.

Also found this:

“Microsoft recognizes that the user experience when running the Windows 11 in virtualized environments may vary from the experience when running non-virtualized. So, while Microsoft recommends that all virtualized instances of the Windows 11 follow the same minimum hardware requirements as described in Section 1.2, the Windows 11 does not apply the hardware-compliance check for virtualized instances either during setup or upgrade. Note that, if the virtualized environment is provisioned such that it does not meet the minimum requirements, this will have an impact to aspects of the user experience when running the OS in the virtualized environment.”

An update on this: MS is starting to enforce the hardware requirements on VMs.

Proxmox’s virtual TPM 2.0 is not quite ready yet either.

I’m running a bunch of R720s with ESXi 6.7, no issues. I agree with the previous reply re: doing a RAID10 with four 500GB SSDs: cheap and reasonably fast.
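For reference, RAID10 stripes across mirrored pairs, so with equal-size drives the usable capacity is half the raw total. A quick check for the four-drive setup above:

```latex
C_{\text{usable}} = \frac{n}{2} \times s = \frac{4}{2} \times 500\,\text{GB} = 1000\,\text{GB}
```

The array survives any single failed drive, and a second failure only if it lands in the other mirror pair.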

I run a bunch of customers on ESXi free - it is reliable and easy to manage if you only need a handful of hosts. The only real limitation I have ever run into is the lack of vMotion. ESXi free has no good way to move VMs between hosts quickly/efficiently.

We have some clients on ESXi free. We’ve also run into some MSP backup products that don’t work smoothly with ESXi snapshots.

True, some backup products won’t play with ESXi free. We use Synology ABFB (Active Backup for Business) and Nakivo; both work without an issue.

I, for one, prefer a standard Debian server with the KVM hypervisor on the server side and virt-manager on a Linux desktop. Mine is a completely barebones setup without any web interface. virt-manager is actually fully featured; for example, it lets me pass whole hard drives through to my file-storage virtual machine.

Note, however, that block device names such as /dev/sda and /dev/sdb are not stable. A 2TB hard drive might be assigned /dev/sda and a 4TB hard drive /dev/sdb, but on the next boot the drives could come up in a different order, because the kernel names disks in the order it detects them. The way around this is the persistent names under /dev/disk/by-id. Try running this command and you will get output similar to mine:

ls -l /dev/disk/by-id/ | grep "sd.$"

lrwxrwxrwx 1 root root 9 Aug 22 18:00 ata-ST2000LM003_HN-M201RAD_S34RJ9FG202386 -> ../../sda
lrwxrwxrwx 1 root root 9 Aug 22 18:00 ata-ST4000LM024-2AN17V_WFG1G0AB -> ../../sdb

So in virt-manager, to pass the hard drives into the VM, specify the full /dev/disk/by-id path of each drive (for example /dev/disk/by-id/ata-ST4000LM024-2AN17V_WFG1G0AB) instead of the /dev/sdX name it is currently symlinked to (“X” being any letter). These IDs are built from the drive’s model and serial number, so they won’t change when a home server reboots. Before you begin installing a virtual machine, make sure to tick the checkbox for showing the advanced settings, and be sure to specify a network bridge if desktop computers or any other hosts are going to access the VMs. In the advanced settings, clicking “Add Hardware” lets you add the two hard drives to the VM.
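To see which stable ID currently maps to which sdX name before typing a path into virt-manager, a small helper along these lines can be used. It is only a sketch: the function name list_whole_disks is hypothetical, and like the grep above it keeps whole sdX disks and skips partitions. Drives usually also appear under wwn- names, which it will list too.

```shell
# Hypothetical helper: list_whole_disks [DIR]
# Prints "stable-path -> current-device" for each whole sdX disk linked from
# DIR (default /dev/disk/by-id). Partition links such as "...-part1" resolve
# to sdX1, sdX2 and so on, and are skipped, like the grep "sd.$" filter.
list_whole_disks() {
    local dir="${1:-/dev/disk/by-id}"
    local link target
    for link in "$dir"/*; do
        # Only symlinks are of interest; skip anything else in the directory.
        [ -L "$link" ] || continue
        # Resolve the symlink to the kernel device it points to right now.
        target="$(readlink -f "$link")"
        # Keep whole disks only: sd followed by a single letter.
        case "${target##*/}" in
            sd[a-z]) printf '%s -> %s\n' "$link" "$target" ;;
        esac
    done
}
```

The left-hand side of each line is the path to give virt-manager; the right-hand side is just today’s kernel name and may differ after the next reboot.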

If you have a lot of Linux experience and can do without a web interface, I’d say go with KVM as the hypervisor and virt-manager as the management client connecting to the remote server.

For starters, I’d recommend setting up a dedicated user for managing virtual machines.

id kvmguests

uid=1001(kvmguests) gid=1001(kvmguests) groups=1001(kvmguests),106(kvm),113(libvirt),64055(libvirt-qemu)

On a Debian server, the ones to pay attention to are the last three groups, which need to be added: kvm, libvirt and libvirt-qemu. Root privileges are not needed, and they shouldn’t be needed anyway.

To create a dedicated user as root:

useradd -d /data/vm -c "KVM Guests" -G kvm,libvirt,libvirt-qemu -s /bin/bash kvmguests
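To confirm that the account really ended up in all three groups, the output of `id -nG kvmguests` can be checked. This is a minimal sketch; the helper name missing_kvm_groups is hypothetical.

```shell
# Hypothetical helper: reads a space-separated group list (as printed by
# `id -nG USER`) on stdin and prints each required group that is missing.
# No output means the account is in kvm, libvirt and libvirt-qemu.
missing_kvm_groups() {
    local have g
    # Pad with spaces so each group name is matched as a whole word
    # ("libvirt" must not match inside "libvirt-qemu").
    have=" $(tr '\n' ' ') "
    for g in kvm libvirt libvirt-qemu; do
        case "$have" in
            *" $g "*) ;;              # present
            *) printf '%s\n' "$g" ;;  # missing
        esac
    done
}
```

Usage: `id -nG kvmguests | missing_kvm_groups`. Any group it prints can be added afterwards with `usermod -aG GROUP kvmguests`.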

Oh, and I almost forgot: set a password for kvmguests with passwd, and set up public key authentication for that user. There are plenty of articles out there on setting up public key authentication for SSH.
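Once key-based SSH login works, virt-manager (or virsh) on the desktop can reach the server’s system-wide libvirt daemon over SSH as kvmguests through a qemu+ssh:// URI; on Debian, membership in the libvirt group is what lets a non-root user use that daemon. The sketch below only builds the URI; the helper name libvirt_ssh_uri and the host name homeserver are placeholders, not part of the original setup.

```shell
# Hypothetical helper: build a libvirt connection URI that tunnels over SSH
# as USER to HOST and talks to the system-wide QEMU/KVM daemon.
libvirt_ssh_uri() {
    local user="$1" host="$2"
    printf 'qemu+ssh://%s@%s/system\n' "$user" "$host"
}

# Example usage from the desktop (homeserver is a placeholder host name):
#   ssh-keygen -t ed25519                 # only if you have no key yet
#   ssh-copy-id kvmguests@homeserver      # install the public key
#   virsh -c "$(libvirt_ssh_uri kvmguests homeserver)" list --all
#   virt-manager -c "$(libvirt_ssh_uri kvmguests homeserver)"
```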

That way, you won’t be running virtual machines as root. Just be sure to give kvmguests ownership of the folder above (/data/vm) once the installation is complete. I use /data/vm as the mount point for my 500GB NVMe drive, which holds my KVM virtual machines and my LXC (non-LXD) Linux containers. The containers reside in /data/vm/lxc, with /var/lib/lxc as a symbolic link pointing to it. And because my 120GB NVMe OS drive has room to spare, I reserved about 70GB of it for /data/vm/iso, which left more room for virtual machines on the 500GB drive.
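Moving an existing directory onto the data drive and leaving a symlink behind, as with /var/lib/lxc above, can be sketched like this. The function name relocate_dir is hypothetical, and it assumes it is run as root while no containers are running.

```shell
# Hypothetical helper: move directory SRC to DEST and leave a symlink at SRC,
# so tools that expect SRC (e.g. LXC with /var/lib/lxc) keep working while
# the data lives on another drive.
relocate_dir() {
    local src="$1" dest="$2"
    # Already done on a previous run: SRC is a symlink, nothing to do.
    if [ -L "$src" ]; then
        return 0
    fi
    # Refuse to merge into or overwrite an existing DEST.
    if [ -e "$dest" ]; then
        printf 'refusing: %s already exists\n' "$dest" >&2
        return 1
    fi
    mkdir -p "$(dirname "$dest")"
    mv "$src" "$dest" && ln -s "$dest" "$src"
}
```

Usage: `relocate_dir /var/lib/lxc /data/vm/lxc`, then `chown kvmguests:kvmguests /data/vm`. Keeping that chown non-recursive leaves the container files under /data/vm/lxc with their existing owners.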

I hope this helps. There are lots of options in this thread; I did not realize it was started a couple of months ago.

For a couple of years I ran libvirt/KVM/QEMU/virt-manager, with scripts for backups etc., for my own internal stuff, while setting up little ESXi free environments for clients all day long. One day I just stopped, set up a couple of ESXi hosts for myself plus a pair of Synologys with ABFB and replication, and couldn’t figure out why I hadn’t done it sooner. It is just easier.